KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Improving speech intelligibility with Privacy-Preserving Augmented Reality

A Privacy-Preserving AR system can augment a speaker’s speech with real-life subtitles to overcome the loss of contextual cues caused by mask-wearing and social distancing during the COVID-19 pandemic.

Article | Spring 2022

Degraded speech intelligibility induces participants in face-to-face conversations to speak more loudly and distinctly, exposing the content to potential eavesdroppers. Face masks degrade speech intelligibility in the same way, a problem that became widespread during the COVID-19 crisis. Augmented Reality (AR) can serve as an effective tool to visualise people’s conversations and promote speech intelligibility; this is known as speech augmentation. However, visualised conversations without proper privacy management can expose AR users to privacy risks.

An international research team of Prof. Lik-Hang LEE in the Department of Industrial and Systems Engineering at KAIST and Prof. Pan HUI in Computational Media and Arts at Hong Kong University of Science and Technology employed a conversation-oriented Contextual Integrity (CI) principle to develop a privacy-preserving AR framework for speech augmentation. At its core, the framework, named Theophany, establishes ad-hoc social networks between relevant conversation participants, allowing them to exchange contextual information and thereby improving speech intelligibility in real time.

Theophany has been implemented as a real-life subtitle application in AR to improve speech intelligibility in daily conversations (Figure 1). This implementation leverages a multi-modal channel, including eye-tracking, camera, and audio. Theophany transforms user speech into text and determines intended recipients through gaze detection. The CI Enforcer module evaluates sentence sensitivity. If the sensitivity meets the speaker’s privacy threshold, the sentence is transmitted to the appropriate recipients (Figure 2).
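The gating step described above can be sketched as follows. This is a minimal illustration, not the Theophany implementation: the keyword table, the `Utterance` type, and the functions `sensitivity` and `enforce` are hypothetical names, the keyword lookup stands in for whatever sensitivity model the real CI Enforcer uses, and a sentence is assumed to "meet" the threshold when its score does not exceed it.

```python
from dataclasses import dataclass

# Hypothetical keyword weights standing in for a real sensitivity classifier.
SENSITIVE_TERMS = {"salary": 0.8, "diagnosis": 0.9, "password": 1.0, "deadline": 0.4}


@dataclass
class Utterance:
    text: str             # assumed output of a speech-to-text step
    gazed_at: list[str]   # assumed output of gaze detection: intended recipients


def sensitivity(text: str) -> float:
    """Toy score in [0, 1]: the highest weight of any listed term in the text."""
    words = (w.strip(".,!?") for w in text.lower().split())
    return max((SENSITIVE_TERMS.get(w, 0.0) for w in words), default=0.0)


def enforce(utterance: Utterance, threshold: float) -> list[str]:
    """Return the recipients who receive the subtitle, or [] if it is withheld.

    Assumption: a sentence passes when its score does not exceed the
    speaker's privacy threshold.
    """
    if sensitivity(utterance.text) <= threshold:
        return list(utterance.gazed_at)
    return []
```

With a threshold of 0.5, a sentence such as "Lunch at noon?" would reach everyone in `gazed_at`, while "My salary is low." would be withheld under these made-up weights.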

Based on the theory of Contextual Integrity (CI), parameters of privacy perception are designed for privacy-preserving face-to-face conversations; these parameters include topic, location, and participants. Accordingly, the operation of Theophany depends on the topic and session. Figure 3 demonstrates several illustrative conversation sessions: (a) the topic is not sensitive and is transmitted to everybody in the user’s gaze; (b) the topic is work-sensitive and is transmitted only to a coworker; (c) the topic is sensitive and is transmitted only to a friend in the user’s gaze; (d) a new friend entering the user’s gaze receives the textual transcription only once a new session (topic) starts; and (e) the topic is highly sensitive, and nobody receives the textual transcription.
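The five sessions in Figure 3 can be read as a small routing policy. The sketch below encodes that reading under stated assumptions: the `Topic` levels, the `Person` fields, and the `recipients` function are hypothetical names, the work-sensitive case is assumed to require the coworker to be in the user’s gaze, and the late-joiner rule from case (d) is assumed to apply only to restricted topics.

```python
from dataclasses import dataclass
from enum import Enum


class Topic(Enum):
    PUBLIC = "not sensitive"        # case (a)
    WORK = "work-sensitive"         # case (b)
    PERSONAL = "sensitive"          # cases (c) and (d)
    PRIVATE = "highly sensitive"    # case (e)


# Relationship allowed to read each restricted topic; None means anyone.
ALLOWED = {Topic.PUBLIC: None, Topic.WORK: "coworker", Topic.PERSONAL: "friend"}


@dataclass
class Person:
    name: str
    relationship: str                       # e.g. "coworker" or "friend"
    in_gaze: bool                           # currently in the speaker's gaze
    present_at_session_start: bool = True   # False if they joined mid-session


def recipients(topic: Topic, people: list[Person]) -> list[str]:
    """Names of the people who receive the transcription for this session."""
    if topic is Topic.PRIVATE:
        return []  # case (e): nobody receives the text
    wanted = ALLOWED[topic]
    selected = []
    for p in people:
        if not p.in_gaze:
            continue
        if wanted is None:
            selected.append(p.name)  # case (a): everybody in gaze
        elif p.relationship == wanted and p.present_at_session_start:
            selected.append(p.name)  # cases (b), (c); late joiners wait, case (d)
    return selected
```

In this model, a friend who walks into view during a sensitive session is skipped until `present_at_session_start` is reset at the next session, which mirrors case (d).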

Theophany within a prototypical AR system augments the speaker’s speech with real-life subtitles to overcome the loss of contextual cues caused by mask-wearing and social distancing during the COVID-19 pandemic. This research was published in ACM Multimedia under the title ‘Theophany: Multi-modal Speech Augmentation in Instantaneous Privacy Channels’ (DOI: 10.1145/3474085.3475507), and was selected as a best paper award candidate (Top 5). Note that the first author is an alumnus of the Industrial and Systems Engineering Department at KAIST.

Most Popular

When and why do graph neural networks become powerful?

Read more

Smart Warnings: LLM-enabled personalized driver assistance

Read more

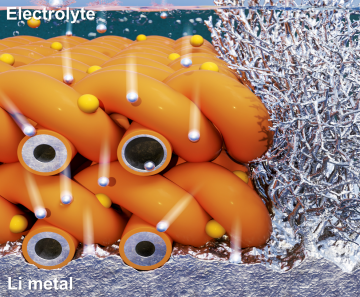

Extending the lifespan of next-generation lithium metal batteries with water

Read more

Professor Ki-Uk Kyung’s research team develops soft shape-morphing actuator capable of rapid 3D transformations

Read more

Oxynizer: Non-electric oxygen generator for developing countries

Read more