KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Fall 2025 Vol. 25

Researchers in the Human-FACTS Lab have developed a large language model-based multimodal warning (LLM-MW) system that personalizes driver assistance. The system adapts to diverse drivers and traffic conditions, offering tailored warnings through auditory, visual, and haptic channels to enhance safety and comfort.

Large language models (LLMs), such as ChatGPT, have revolutionized artificial intelligence (AI), demonstrating remarkable capabilities in understanding context, reasoning, and generating human-like responses. These capabilities make LLMs a promising tool for a variety of real-world problems. Driver assistance systems face one such problem: drivers differ in their hazard perception abilities and interaction preferences depending on characteristics such as age, experience, and physical condition. Traditional driver assistance systems do not adapt dynamically to these individual needs, underscoring the need for a personalized and adaptive solution.

To address this challenge, researchers from the KAIST Human-FACTS Lab, led by Professor Tiantian Chen, have developed the LLM-based Multimodal Warning (LLM-MW) system, an innovative framework to personalize driver assistance through LLMs. By interpreting both the traffic environment and drivers' individual profiles, LLM-MW generates timely, personalized warnings tailored to each driver’s unique needs and preferences.

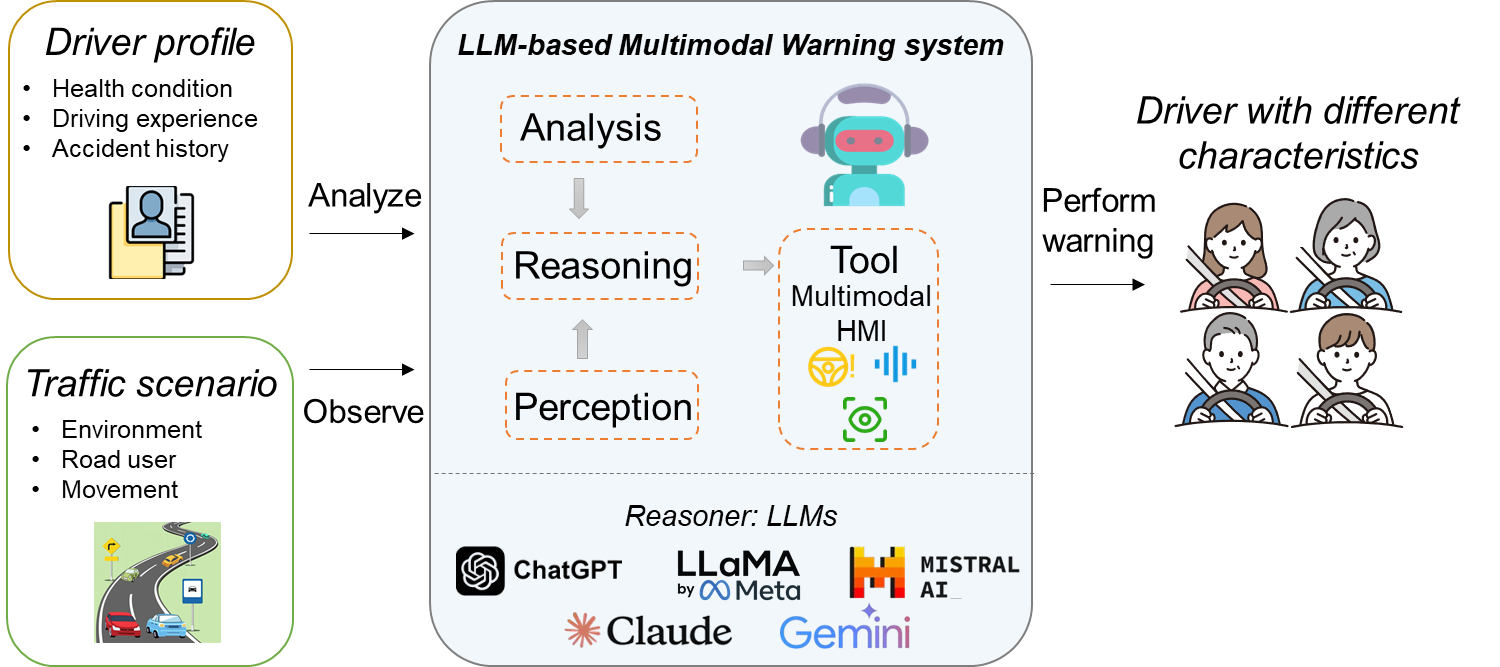

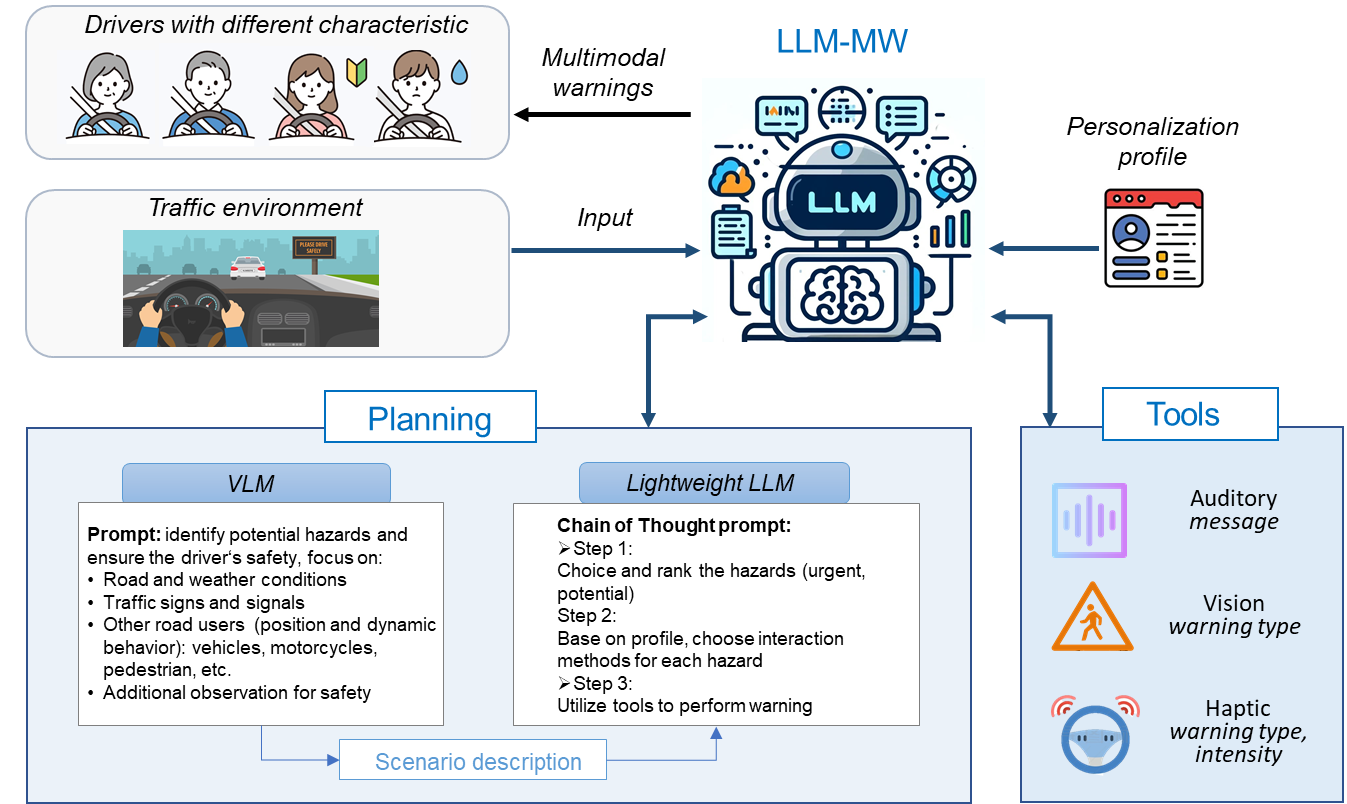

The LLM-MW system operates through interconnected modules, leveraging LLMs within an agent-based structure (Fig. 1). After receiving the driver’s profile, which includes health condition, driving experience, and other attributes, the system creates a personalization profile using factor-centered retrieval-augmented generation (RAG) techniques. This method enables the LLM to integrate external domain-specific knowledge from the driver assistance field. The generated personalization profiles, which include driver feature analysis and interaction strategies, are used to customize the system's functionality. During operation, the system uses a vision-language model (e.g., GPT-4 Vision) and a lightweight LLM (e.g., Mixtral-8x7B) to conduct step-by-step reasoning: analyzing traffic scenarios, identifying potential hazards, and designing personalized warnings that specify both the warning content and the interaction method (Fig. 2). These warnings are then delivered to the driver through auditory, visual, and haptic tools.
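To make the factor-centered retrieval step more concrete, the following is a minimal Python sketch, not the authors' implementation. It assumes that "factor-centered" means knowledge is looked up once per driver factor and then placed in the prompt that asks the LLM for a personalization profile. The names `DriverProfile`, `KNOWLEDGE_BASE`, `retrieve_by_factor`, and `build_profile_prompt` are hypothetical, the knowledge entries are placeholders, and no actual LLM is called.

```python
from dataclasses import dataclass


@dataclass
class DriverProfile:
    """Hypothetical driver profile; field names are assumptions."""
    name: str
    age: int
    factors: list[str]  # e.g. health conditions or experience level


# Hypothetical in-memory knowledge base keyed by driver factor.
# The entries are placeholders, not real domain guidance.
KNOWLEDGE_BASE: dict[str, list[str]] = {
    "novice": ["<placeholder guidance for novice drivers>"],
    "hearing_loss": ["<placeholder guidance for hearing loss>"],
}


def retrieve_by_factor(profile: DriverProfile) -> dict[str, list[str]]:
    """Look up knowledge once per factor; unknown factors map to an empty list."""
    return {factor: KNOWLEDGE_BASE.get(factor, []) for factor in profile.factors}


def build_profile_prompt(profile: DriverProfile) -> str:
    """Assemble the prompt an LLM could receive to write a personalization profile."""
    lines = [f"Driver: {profile.name}, age {profile.age}"]
    for factor, snippets in retrieve_by_factor(profile).items():
        lines.append(f"Factor: {factor}")
        lines.extend(f"  - {snippet}" for snippet in snippets)
    lines.append("Task: write a driver feature analysis and an interaction strategy.")
    return "\n".join(lines)


# Illustrative driver, invented for this sketch.
driver = DriverProfile(name="Driver A", age=30, factors=["novice", "hearing_loss"])
prompt = build_profile_prompt(driver)
print(prompt)
```

In a full system, the returned prompt would be sent to the LLM, and the resulting personalization profile would then guide warning design during driving.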

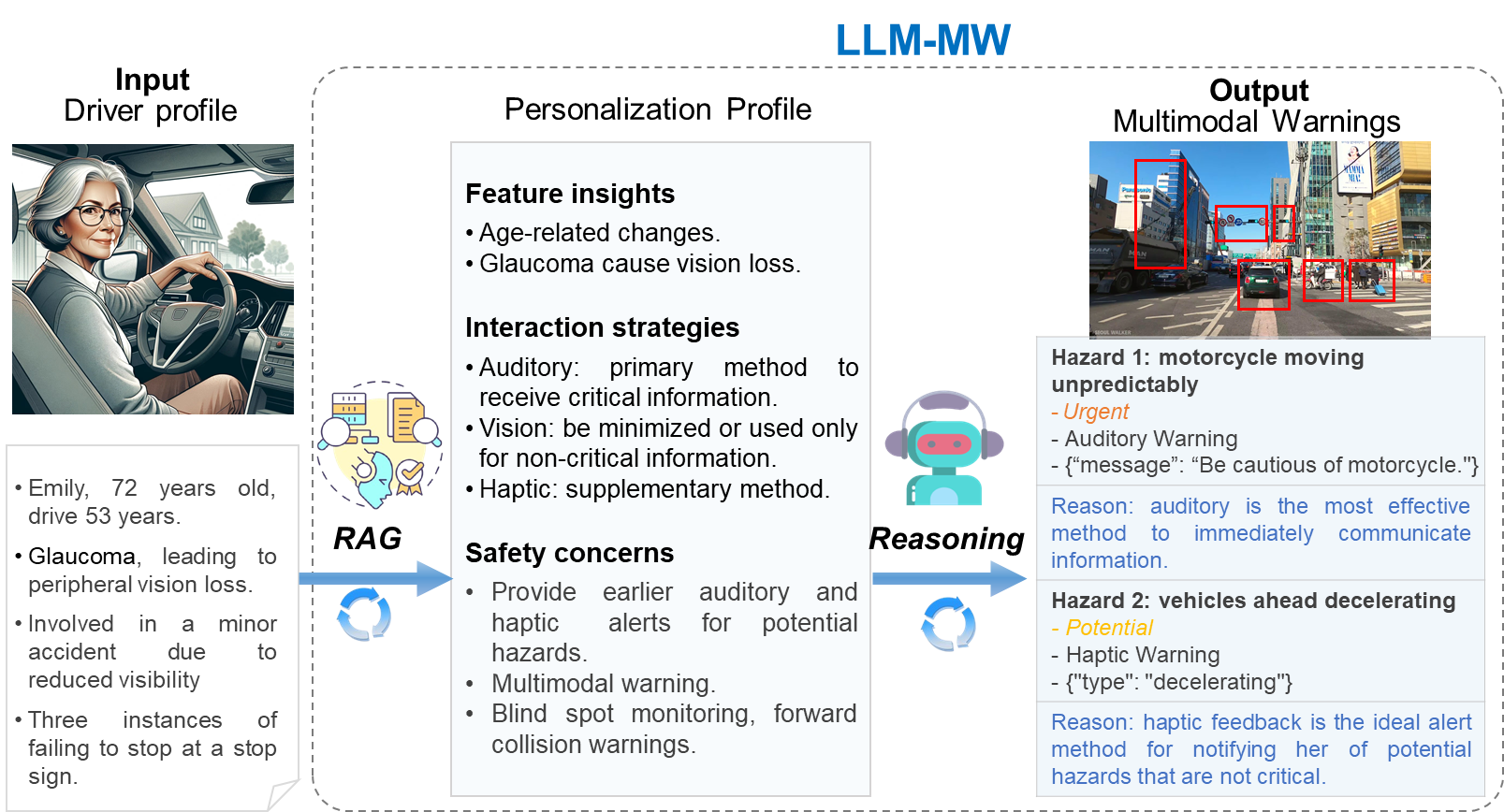

Figure 3 illustrates the performance of LLM-MW for Emily, a 72-year-old driver with glaucoma, in an intersection scenario. Considering her peripheral vision loss, the system prioritized auditory warnings as the primary communication method, supplemented by haptic feedback for urgent hazards, as shown in the personalization profile. In the example scenario, LLM-MW delivered an auditory warning to convey critical information instantly, while issuing a haptic warning for non-critical hazards such as decelerating vehicles ahead. This personalized approach ensured effective warning delivery, enhancing Emily's situational awareness of the traffic environment.
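The channel choice in this scenario can be illustrated with a small Python sketch. It is only an assumption-laden illustration: in LLM-MW the warning design is produced by LLM reasoning, whereas the fixed rule below simply mirrors the example scenario as described (auditory for critical information, haptic for non-critical hazards). The names `Channel`, `InteractionStrategy`, `Hazard`, and `choose_channel` are hypothetical, and the critical hazard in the example list is invented for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Channel(Enum):
    AUDITORY = "auditory"
    VISUAL = "visual"
    HAPTIC = "haptic"


@dataclass
class InteractionStrategy:
    """Hypothetical slice of a personalization profile."""
    critical_channel: Channel      # channel for critical information
    non_critical_channel: Channel  # channel for less urgent hazards


@dataclass
class Hazard:
    description: str
    critical: bool


def choose_channel(strategy: InteractionStrategy, hazard: Hazard) -> Channel:
    """Pick the delivery channel from the strategy based on hazard criticality."""
    if hazard.critical:
        return strategy.critical_channel
    return strategy.non_critical_channel


# Strategy mirroring the example scenario: auditory first, haptic as a supplement.
emily_strategy = InteractionStrategy(
    critical_channel=Channel.AUDITORY,
    non_critical_channel=Channel.HAPTIC,
)

hazards = [
    Hazard("crossing traffic at the intersection", critical=True),  # invented example
    Hazard("decelerating vehicle ahead", critical=False),
]

for hazard in hazards:
    channel = choose_channel(emily_strategy, hazard)
    print(f"{hazard.description}: {channel.value}")
```

A visual channel is defined but unused here, reflecting the profile's preference to avoid relying on vision for a driver with peripheral vision loss.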

Looking ahead, LLM-MW represents a significant advancement in driver assistance technology with broad real-world applications. The system can serve as an intelligent co-pilot for various driver groups: providing culturally aware guidance for international drivers, offering intuitive multimodal feedback for those with sensory limitations, and delivering adaptive support for professional drivers facing long hours behind the wheel. Beyond its immediate application in driver assistance, this research demonstrates how large language models can enhance human-machine interaction in intelligent vehicles, paving the way for more intuitive, personalized, and safer driving experiences.

This research will be published under the title "An LLM-based Multimodal Warning System for Driver Assistance" at the 27th IEEE International Conference on Intelligent Transportation Systems (ITSC 2024).
