Much of the research effort in robot manipulation is on enabling pick-and-place. However, not all objects can be grasped - some objects may be too big, thin, or heavy . In such cases, the robot needs to utilize non-grasping manipulation motions, such as pushing, toppling, and dragging, to move the object to the target location. Deep reinforcement learning algorithms are used to enable the robot with such capability.

Video : https://www.youtube.com/watch?v=SVUsKp_ij-U&ab_channel=JaehyungKim

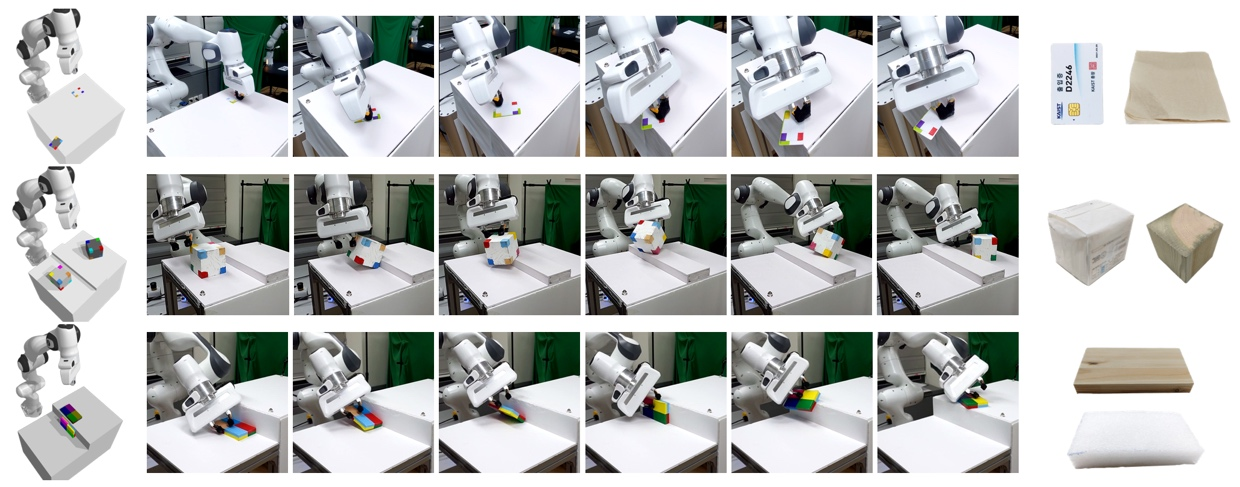

Humans possess remarkable skills in non-prehensile manipulation techniques such as pulling, pushing, dragging, and tumbling, which enables them to handle objects that are hard or impossible to grasp. In contrast, most robots are only capable of performing pick-and-place and fail in situations where grasping is not an option due to physical or geometric limitations. This study’s objective is to equip robots with the ability to manipulate objects even in such circumstances, using a basic gripper and an RGB camera as shown in the figure above. This is a challenging problem that involves planning a sequence of combined robot and object movements while accounting for the contact interactions among an object, the robot, and the environment. Since the robot is equipped with a simple gripper, it must leverage gravity and the environment's geometry to perform the task effectively.

The state-of-the-art algorithms for such problems are planning algorithms that use tree search or trajectory optimization based on an analytical physics model. However, their applicability in the real world is limited by several factors. First, their search space is extremely complex, involving both discrete contact activations and continuous robot and object motions, with different sub-manifolds defined by different motion constraints. This makes planners too slow to use in practice. Second, they require accurate analytical contact models of the world, which is non-trivial to define as rigid-body dynamics are often insufficient. Moreover, even if the governing equation of contacts can be defined, extracting the physical parameters for instantiating the equation, such as the center of mass or friction coefficient, from high-dimensional RGB images is challenging.

Because of these limitations, planning algorithms have only been applied in a controlled setup where all necessary information about the object, such as inertial parameters or pose, is known. To achieve reasonable planning time, strong assumptions are often used with contact interactions, robot motions, and transition dynamics, such as quasi-static dynamics, predefined contact modes (slipping, static, rolling, etc.), and predefined contact locations in the environment, the object, and the robot.

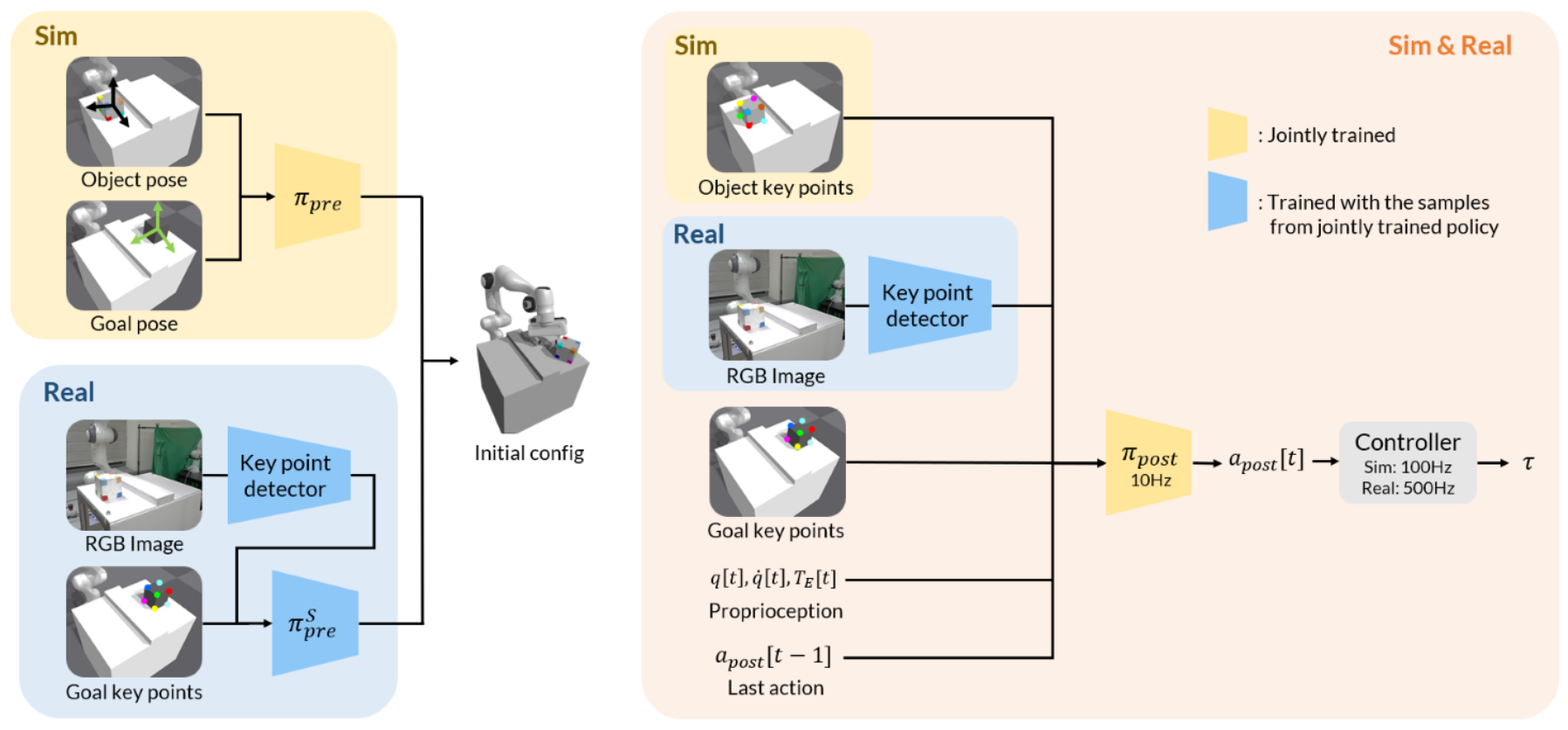

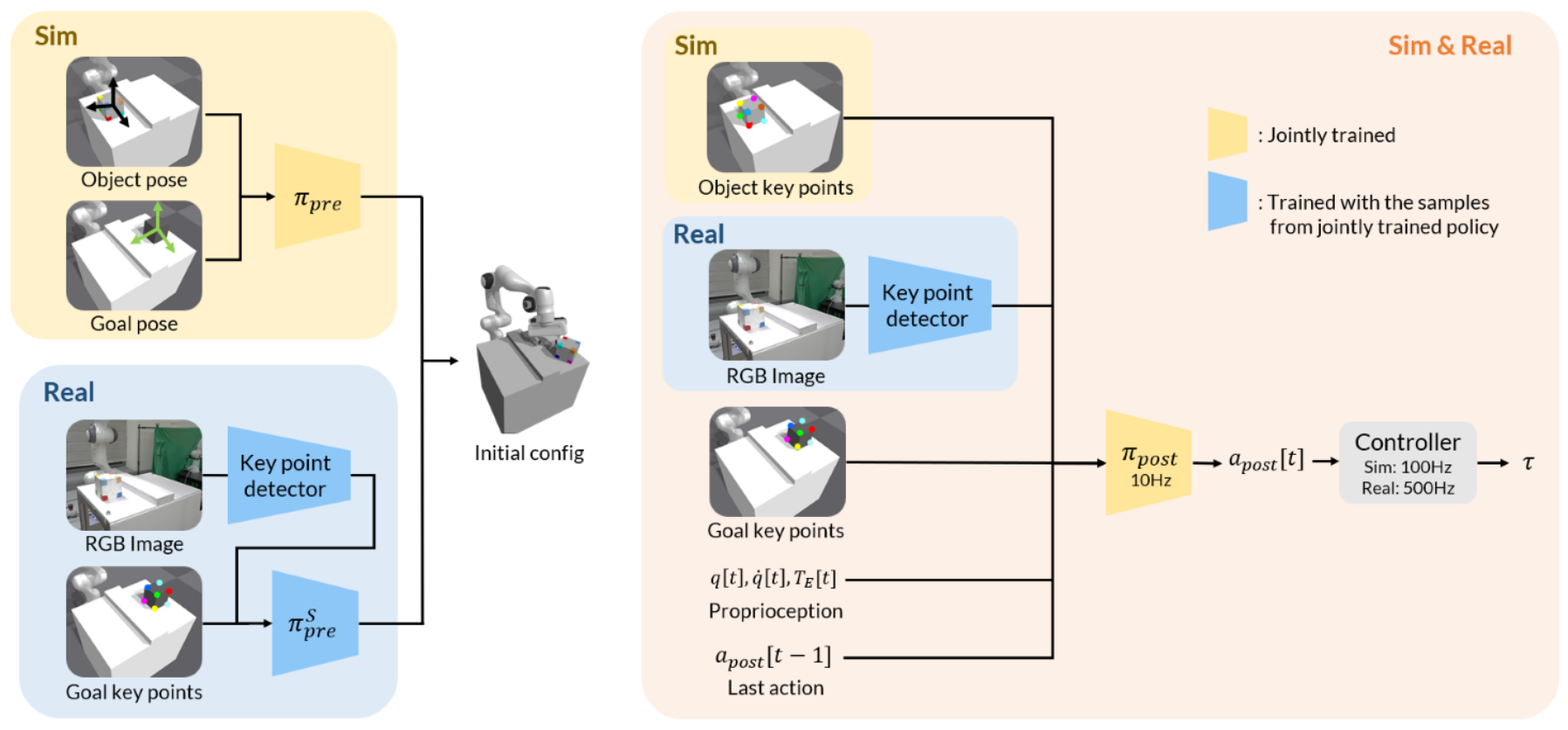

An alternative solution is proposed for non-prehensile manipulation that utilizes deep reinforcement learning (RL) to handle these challenges. By directly learning the representation that implicitly encodes the necessary information from sensory data, the proposed approach eliminates the need to extract difficult-to-observe object inertial parameters. At the expense of offline training, the online computation is reduced to feed-forward predictions from a neural network rather than searching in a complex space. Notably, the approach does not make assumptions about contact interactions and robot or object motions, making the solution more versatile than traditional planning algorithms.