KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Spring 2026 Vol. 26

Advanced OOD detection for secure and reliable AI applications

A KAIST research team led by Professor Jong-Seok Lee discovered that gently sloped and extended classification margins can reduce overconfidence on out-of-distribution samples. The proposed method is expected to be useful in critical AI applications, such as autonomous driving and medical diagnosis, where safety and human lives are directly at stake.

Out-of-distribution (OOD) detection is important in machine learning, particularly as AI systems are increasingly deployed in real-world applications where they encounter diverse and unpredictable data. An OOD detector identifies data points that deviate significantly from the training distribution, which is essential for maintaining the reliability and safety of AI systems. By flagging these anomalies, it helps prevent inappropriate or unsafe AI behavior that could arise from unexpected inputs. This capability is especially important in critical applications like autonomous driving, medical diagnosis, and financial fraud detection, where errors due to unanticipated data can have serious consequences.
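To make the idea concrete, the sketch below shows one widely used baseline for OOD detection: flag an input as OOD when the classifier's highest softmax probability falls below a threshold. This is an illustration only, not the method developed by the KAIST team; the function names and the threshold value of 0.7 are hypothetical choices made for this example.

```python
import math

def softmax(logits):
    """Convert raw class scores into probabilities (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def is_ood(logits, threshold=0.7):
    """Flag an input as OOD when the top softmax probability is low.

    The threshold is an assumed value for illustration; in practice it
    would be tuned on held-out data.
    """
    return max(softmax(logits)) < threshold

# A confident prediction is treated as in-distribution,
# while a nearly flat score vector is flagged as OOD.
confident_scores = [5.0, 0.1, 0.2]
uncertain_scores = [1.0, 0.9, 1.1]
print(is_ood(confident_scores), is_ood(uncertain_scores))
```

A detector of this kind only works if the classifier is not overconfident on unfamiliar inputs, which is the problem the research described here addresses.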

Existing studies on OOD detection can be broadly classified into inference-phase approaches and training-phase approaches. The former aims to achieve a gently sloped classification margin, while the latter seeks to create an embedding space that increases inter-class discrepancy, thereby reducing overconfidence in OOD samples. Based on these insights, Professor Jong-Seok Lee’s research team from the Department of Industrial and Systems Engineering at KAIST has developed a novel method that combines the strengths of both approaches. The method also incorporates intra-class compactness, which is likewise important for OOD detection. As a result, it not only detects OOD samples effectively but also overcomes the limitations of existing approaches: slow inference and low OOD detection accuracy in inference-phase methods, and the need for auxiliary data or a drop in accuracy in training-phase methods.

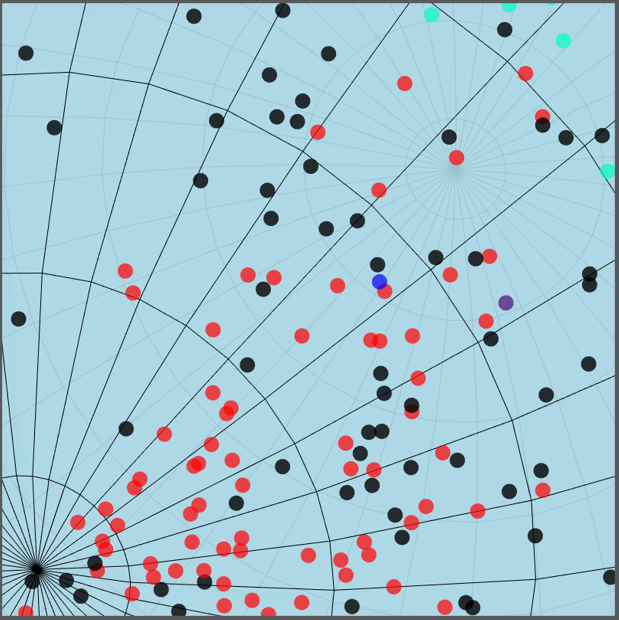

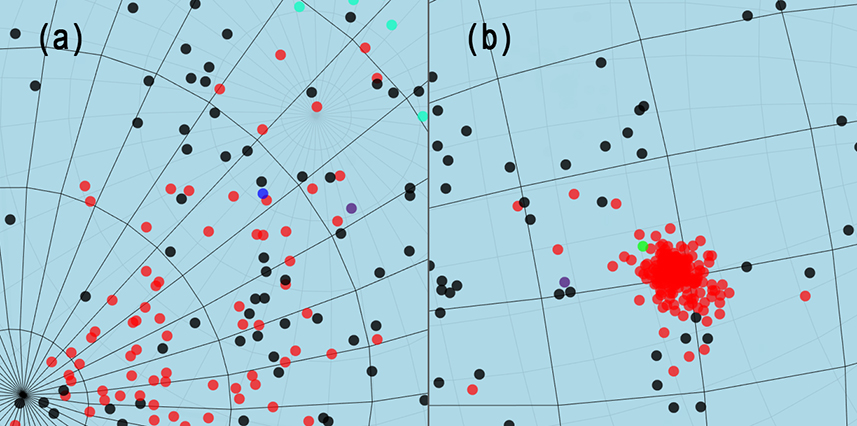

To enhance inter-class discrepancy and intra-class compactness, and thereby create a more discriminative embedding space, the research team proposed using angular margin loss instead of the commonly used softmax loss for neural network training. Fig. 1 demonstrates the effectiveness of this idea. When angular margin loss is used (Fig. 1(b)), the in-distribution (ID) samples are more tightly clustered than when softmax loss is used (Fig. 1(a)). This indicates that the classification margins are extended, enabling a clear distinction between OOD samples and ID samples.
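The article does not say which angular margin formulation the team used. As a minimal sketch, assuming an additive angular margin in the style of ArcFace, the code below adds a margin to the angle between an embedding and its true class direction before computing cross-entropy. The margin of 0.5 and scale of 30 are assumed values, and all function names are hypothetical.

```python
import math

def normalize(vec):
    """Scale a vector to unit length so that only its direction matters."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]

def angular_margin_logits(embedding, class_weights, target, margin=0.5, scale=30.0):
    """Cosine logits with an additive angular margin on the target class."""
    unit_emb = normalize(embedding)
    logits = []
    for k, weight in enumerate(class_weights):
        cos = sum(a * b for a, b in zip(unit_emb, normalize(weight)))
        cos = max(-1.0, min(1.0, cos))  # guard acos against rounding error
        if k == target:
            # Penalize the true class by widening its angle. Clamping at pi
            # is a simplification of how full implementations handle overflow.
            theta = math.acos(cos)
            cos = math.cos(min(math.pi, theta + margin))
        logits.append(scale * cos)
    return logits

def cross_entropy(logits, target):
    """Negative log-likelihood of the target class (numerically stable)."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[target]

# Toy 2-D example: an embedding closer to class 0 than to class 1.
embedding = [1.0, 0.8]
class_weights = [[1.0, 0.0], [0.0, 1.0]]
plain = cross_entropy(angular_margin_logits(embedding, class_weights, 0, margin=0.0), 0)
with_margin = cross_entropy(angular_margin_logits(embedding, class_weights, 0), 0)
print(plain, with_margin)
```

In this toy case the sample is classified correctly without the margin, yet the margin version assigns it a much larger loss. Training therefore keeps pulling each embedding toward its class direction, which is one way to obtain tighter clusters of the kind described for Fig. 1(b).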

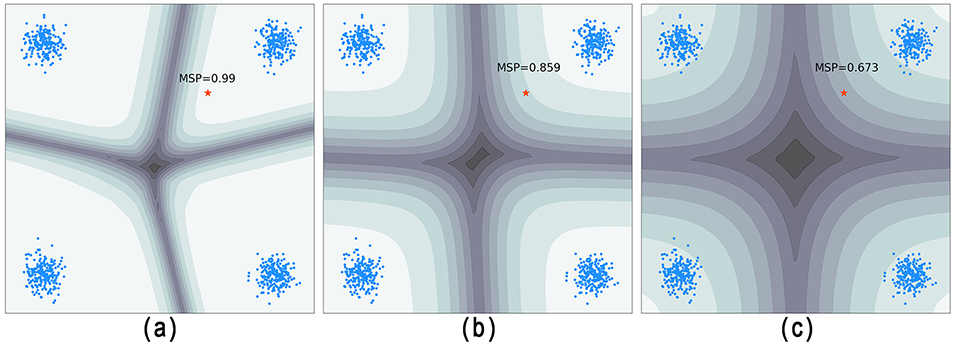

To create a gently sloped margin, a weight regularization method was employed. As shown in Fig. 2, when no weight regularization was applied (Fig. 2(a)), the classification boundary was steep. As weight regularization was increasingly imposed, moderately in Fig. 2(b) and more strongly in Fig. 2(c), the classification boundary became progressively gentler. As a result, the prediction value for the OOD sample, marked with the red star, decreased as the regularization strength increased. This approach effectively prevents overconfident predictions for OOD samples.
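The effect can be reproduced in a toy setting. The article does not specify the regularizer, so the sketch below assumes a plain L2 penalty on a one-dimensional logistic classifier without a bias term. The data, the regularization strengths, and the position of the OOD point are all hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_weight(xs, ys, l2_strength, steps=2000, lr=0.5):
    """Fit a bias-free 1-D logistic classifier by gradient descent.

    The loss is the mean cross-entropy plus (l2_strength / 2) * w**2.
    """
    w = 0.0
    for _ in range(steps):
        grad = sum((sigmoid(w * x) - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * (grad + l2_strength * w)
    return w

# Two well-separated classes on a line, and a point far from both.
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]
ood_x = 5.0

confidences = {}
for l2_strength in (0.0, 0.1, 1.0):
    w = train_weight(xs, ys, l2_strength)
    confidences[l2_strength] = sigmoid(w * ood_x)
    print(f"l2={l2_strength}: weight={w:.3f}, OOD confidence={confidences[l2_strength]:.6f}")
```

Stronger regularization yields a smaller weight, so the sigmoid rises more gently and the prediction for the distant point moves away from 1. This mirrors the trend from Fig. 2(a) to Fig. 2(c), although the actual method operates on a neural network rather than a single weight.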

In the experiments, the proposed method achieved the best OOD detection performance among the existing state-of-the-art methods it was compared with. It also maintained excellent classification performance while achieving the shortest training and inference times, highlighting its computational efficiency. The research was recently accepted by IEEE Transactions on Neural Networks and Learning Systems and published as an early access article.
