KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Image-space filtering and error analysis enable an effective adaptive process for high-quality rendering

The proposed image-space filtering method is based on a novel theoretical analysis and enables an effective yet efficient adaptive process for high-quality rendering. It achieves state-of-the-art results and can be integrated with existing rendering systems.

Article | Spring 2016

Rendering, the process of generating images from three-dimensional scenes, is one of the fundamental tools in computer graphics. Many computer graphics applications use one of two representative rendering algorithms: rasterization, designed for high performance, and ray tracing, designed for high quality. Thanks to their performance, rasterization methods have been widely used in interactive applications such as games. Ray tracing, on the other hand, and especially Monte Carlo (MC) based path tracing, generates physically correct images while supporting a wide variety of rendering effects and material properties. Ray-tracing-based techniques have recently been adopted more widely in various applications. Nonetheless, they are still too slow for many applications, including games.

There has been a significant amount of research on improving the performance and quality of Monte Carlo based ray tracing. Recently, a KAIST graphics lab directed by Prof. Sung-Eui Yoon designed an effective and efficient image-space adaptive rendering technique that greatly improves the rendering quality of MC-based ray tracing.

MC-based rendering techniques solve the rendering equation, which describes the physical interaction between light and materials, by tracing random ray samples through each pixel and averaging their contributions to compute the pixel's color. In practice, hundreds of ray samples per pixel are required to produce high-quality images. With fewer samples, the images show a significant level of noise because of the randomized nature of Monte Carlo techniques.
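The following Python sketch makes the noise behavior concrete. It estimates one pixel's color as the mean of random samples and reports the standard error of that mean, which shrinks roughly as 1/sqrt(N) for N samples per pixel. The integrand `radiance(u) = u * u` is a hypothetical stand-in for the light arriving through a pixel, and its exact mean is 1/3. A real path tracer would trace one ray per sample instead of calling this function.

```python
import math
import random


def radiance(u):
    # Hypothetical stand-in for the radiance carried by a ray through sub-pixel position u in [0, 1)
    return u * u


def estimate_pixel(num_samples, rng):
    """Monte Carlo estimate of a pixel's color: the mean of num_samples (>= 2) random samples."""
    samples = [radiance(rng.random()) for _ in range(num_samples)]
    mean = sum(samples) / num_samples
    # Unbiased sample variance -> standard error of the mean, which shrinks as 1/sqrt(N)
    var = sum((s - mean) ** 2 for s in samples) / (num_samples - 1)
    return mean, math.sqrt(var / num_samples)


rng = random.Random(0)
for n in (4, 16, 64, 256, 1024):
    mean, stderr = estimate_pixel(n, rng)
    print(f"{n:5d} spp: estimate={mean:.4f}  std. error={stderr:.4f}  (exact value 1/3)")
```

Quadrupling the sample count only halves the expected error. This is why brute-force sampling needs hundreds of samples per pixel before the noise becomes invisible.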

To reduce the noise in images generated by MC ray tracing, one can average the samples within a filtering window around each pixel and use that average as the pixel's filtered color. Such image-space filtering is fast. However, depending on the filtering parameters (e.g., the filtering bandwidth), it can over-smooth some image regions while failing to remove noise in others.
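Below is a minimal sketch of this fixed-window filtering. It assumes a single grayscale scanline and a box (uniform) window, and the half-width `radius` plays the role of the filtering bandwidth. The function name `box_filter` is hypothetical. The example shows both failure modes: a larger radius suppresses more of the alternating noise, but it also blurs the step edge.

```python
def box_filter(row, radius):
    """Replace each pixel by the mean of its neighbors within `radius` pixels (window clamped at borders)."""
    n = len(row)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = row[lo:hi]
        out.append(sum(window) / len(window))
    return out


edge = [0.0] * 4 + [1.0] * 4   # a sharp edge that should stay sharp
noisy = [0.4, 0.6] * 4         # a flat 0.5 region corrupted by alternating noise
for r in (0, 1, 2):
    print(r, [round(v, 3) for v in box_filter(noisy, r)], [round(v, 3) for v in box_filter(edge, r)])
```

With `radius=2`, the noisy pixel at index 3 moves from 0.6 to 0.52, closer to the underlying 0.5. The edge pixel at the same index, however, moves from 0.0 to 0.4. No single radius suits both signals, which motivates choosing the bandwidth per pixel.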

The main idea of the proposed image-space adaptive rendering technique is to perform a weighted local regression with the ray samples around each pixel and to find the optimal filtering bandwidth, the one that minimizes the filtering error. Specifically, the approach fits a linear model to the samples for each pixel and uses a theoretical error analysis to compute the representative color with the minimum error. The same analysis enables adaptive sampling, which generates more ray samples in image regions with higher filtering errors. As a result, the image converges faster to the ground-truth image, i.e., the image that would be obtained with an infinite number of ray samples. Thanks to this robust analysis and optimization process, the approach achieves state-of-the-art results compared to prior methods [1].
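The Python sketch below illustrates the idea on a one-dimensional scanline. It is not the authors' implementation. The Gaussian kernel, the candidate bandwidths, and especially the bias estimate are simplifying assumptions. The bias estimate is the difference from the smallest-bandwidth fit, corrected for the noise expected in that difference.

The sketch has three hypothetical helpers:

- `local_linear` fits a weighted linear model and returns the estimate, its variance, and the equivalent-kernel weights.
- `select_bandwidth` keeps the candidate with the smallest estimated error (variance plus squared bias).
- `allocate_samples` splits an extra sample budget in proportion to the estimated errors.

```python
import math


def local_linear(xs, ys, vs, x0, h):
    """Weighted local linear regression at x0 with a Gaussian kernel of bandwidth h.

    xs: pixel positions, ys: per-pixel mean colors, vs: variance of each mean.
    Returns (estimate at x0, variance of that estimate, equivalent-kernel weights).
    """
    ds = [x - x0 for x in xs]
    ws = [math.exp(-0.5 * (d / h) ** 2) for d in ds]
    s0 = sum(ws)
    s1 = sum(w * d for w, d in zip(ws, ds))
    s2 = sum(w * d * d for w, d in zip(ws, ds))
    det = s0 * s2 - s1 * s1
    # The fitted intercept equals sum(l_i * y_i); these l_i are the equivalent-kernel weights
    ls = [w * (s2 - d * s1) / det for w, d in zip(ws, ds)]
    est = sum(l * y for l, y in zip(ls, ys))
    var = sum(l * l * v for l, v in zip(ls, vs))
    return est, var, ls


def select_bandwidth(xs, ys, vs, x0, bandwidths):
    """Return (bandwidth, estimate, estimated error) minimizing variance + bias^2.

    Heuristic bias estimate (an assumption, not the published estimator): the squared
    difference from the smallest-bandwidth fit, minus the noise expected in that difference.
    """
    hs = sorted(bandwidths)
    ref, _, l_ref = local_linear(xs, ys, vs, x0, hs[0])
    best = None
    for h in hs:
        est, var, ls = local_linear(xs, ys, vs, x0, h)
        noise = sum((a - b) ** 2 * v for a, b, v in zip(ls, l_ref, vs))
        bias2 = max(0.0, (est - ref) ** 2 - noise)
        err = var + bias2
        if best is None or err < best[2]:
            best = (h, est, err)
    return best


def allocate_samples(errors, budget):
    """Hypothetical rule: give each pixel a share of `budget` extra samples proportional to its error."""
    total = sum(errors)
    if total == 0:
        return [budget // len(errors)] * len(errors)
    # Flooring may leave a few samples unassigned
    return [int(budget * e / total) for e in errors]


# Noise-free step edge at x = 20 with an assumed per-pixel variance of 0.01
xs = list(range(40))
ys = [0.0 if x < 20 else 1.0 for x in xs]
vs = [0.01] * len(xs)
hs = [0.5, 1.0, 2.0, 4.0, 8.0]
flat = select_bandwidth(xs, ys, vs, 10, hs)
edge = select_bandwidth(xs, ys, vs, 19, hs)
print("flat region:", flat)
print("next to edge:", edge)
print("extra samples:", allocate_samples([flat[2], edge[2]], 64))
```

On this step edge, the pixel next to the edge keeps the smallest bandwidth (0.5), because wider windows would mix in values from the other side. The pixel in the flat region selects a wider bandwidth, so it gets a lower-variance estimate and receives fewer of the extra samples.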

This technique has since been improved to filter multiple pixels with a single predicted linear model, whereas the method described above uses one model per pixel. The improved technique has been shown to achieve near-interactive performance even with path tracing [2].
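As a rough illustration of reusing one model for several pixels, the following sketch fits a Gaussian-weighted linear model at a few center pixels and then evaluates each model over a window around its center. The helper names, the evenly spaced centers, the fixed radius, and the alternating noise pattern are all assumptions made for illustration. The sketch does not reproduce how [2] chooses its models.

```python
import math


def fit_linear_model(xs, ys, xc, h):
    """Fit y ~ b0 + b1 * (x - xc) by Gaussian-weighted least squares around center xc."""
    ds = [x - xc for x in xs]
    ws = [math.exp(-0.5 * (d / h) ** 2) for d in ds]
    s0 = sum(ws)
    s1 = sum(w * d for w, d in zip(ws, ds))
    s2 = sum(w * d * d for w, d in zip(ws, ds))
    t0 = sum(w * y for w, y in zip(ws, ys))
    t1 = sum(w * d * y for w, d, y in zip(ws, ds, ys))
    det = s0 * s2 - s1 * s1
    # Solve the 2x2 normal equations [[s0, s1], [s1, s2]] @ [b0, b1] = [t0, t1] (Cramer's rule)
    b0 = (s2 * t0 - s1 * t1) / det
    b1 = (s0 * t1 - s1 * t0) / det
    return b0, b1


def predict_window(b0, b1, xc, radius):
    """Reuse one fitted model to predict every pixel within `radius` of the center."""
    return {x: b0 + b1 * (x - xc) for x in range(xc - radius, xc + radius + 1)}


xs = list(range(28))
truth = [0.1 + 0.02 * x for x in xs]
# Alternating +/-0.05 stands in for per-pixel Monte Carlo noise (deterministic for illustration)
ys = [t + (0.05 if x % 2 else -0.05) for x, t in zip(xs, truth)]

filtered = {}
for xc in (3, 10, 17, 24):  # 4 fitted models instead of 28 per-pixel fits
    b0, b1 = fit_linear_model(xs, ys, xc, h=3.0)
    filtered.update(predict_window(b0, b1, xc, radius=3))

max_err = max(abs(filtered[x] - t) for x, t in zip(xs, truth))
print(f"{len(filtered)} pixels from 4 models, max error vs. noise-free line: {max_err:.4f}")
```

Here four fitted models cover all 28 pixels instead of one regression per pixel. On noise-free linear data, the fitted coefficients reproduce the line exactly.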

Beyond its theoretical benefits, this line of research is promising because the proposed method works in the image space and can therefore be easily integrated with existing ray tracing systems. Owing to these characteristics, a recent SIGGRAPH tutorial covered this topic [3]. The source code of the proposed image-space adaptive rendering method is available on the project webpage [1].

[1] Adaptive Rendering based on Weighted Local Regression

Bochang Moon, Nathan Carr, and Sung-Eui Yoon

ACM Transactions on Graphics, 2014 (presented at ACM SIGGRAPH 2015)

http://sglab.kaist.ac.kr/WLR/

[2] Adaptive Rendering with Linear Predictions

Bochang Moon, Jose A. Iglesias-Guitian, Sung-Eui Yoon, and Kenny Mitchell

ACM Transactions on Graphics (Proc. ACM SIGGRAPH), 2015

[3] Denoising Your Monte Carlo Renders: Recent Advances in Image-Space Adaptive Sampling and Reconstruction

Nima Kalantari, Fabrice Rousselle, Pradeep Sen, Sung-Eui Yoon, and Matthias Zwicker

ACM SIGGRAPH 2015 (Tutorial)

Most Popular

When and why do graph neural networks become powerful?

Read more

Smart Warnings: LLM-enabled personalized driver assistance

Read more

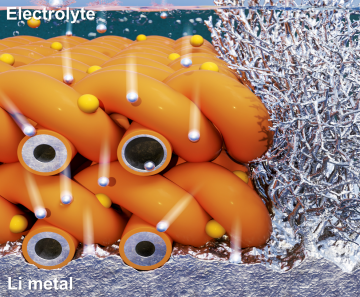

Extending the lifespan of next-generation lithium metal batteries with water

Read more

Professor Ki-Uk Kyung’s research team develops soft shape-morphing actuator capable of rapid 3D transformations

Read more

Oxynizer: Non-electric oxygen generator for developing countries

Read more

![Equal-time comparison in the San Miguel scene. Left: Monte Carlo path tracing with 143 samples per pixel (spp). Right: the proposed image-space filtering and error analysis method, given the same rendering time as the left image. The new result is visually and numerically superior to the left image. Note that across both defocused and focused regions, the proposed method removes noise while preserving sharp edges. The images are from the presentation slides of [1].](http://breakthroughs.kaist.ac.kr/wp/wp-content/uploads/2016/02/01-2.jpg)