KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Spring 2025 Vol. 24

ExpressPass: Credit-scheduled delay-bounded congestion control in datacenters

In datacenters, when many hosts communicate simultaneously, large queueing delays dominate end-to-end latency. A novel technology, ExpressPass, uses credit packets to bound the network queue and thereby achieve ultra-low end-to-end latency. ExpressPass can replace existing datacenter congestion control without hardware modification.

Article | Spring 2018

Suppose you look something up with a search engine. When you type a query and hit enter, the query starts its journey to a datacenter, where a single task is often divided and distributed to many servers. When many servers communicate at once, congestion frequently occurs and packets are delayed as a result. The technique used to control such congestion and reduce end-to-end latency is called ‘congestion control’.

However, it is challenging for a congestion control scheme to achieve both short end-to-end latency, which improves the user experience, and high link utilization, which maximizes resource usage in the datacenter.

Some congestion control schemes, such as RCP and DCTCP, target high link utilization. In maximizing link utilization, however, they increase network queueing, which hurts end-to-end latency and the user experience. Other schemes, such as DX and HULL, aim for low end-to-end latency, but their effort to reduce network queueing often results in low link utilization.

Recently, Prof. Dongsu Han’s research team (Inho Cho, Dr. Keon Jang, Prof. Dongsu Han) succeeded in developing a novel congestion control scheme, ExpressPass, which achieves both short end-to-end latency and high link utilization. ExpressPass introduces the notion of a credit packet, a specially designed packet that controls the transmission of a data packet. Before a sender can transmit a data packet, it must first receive a credit packet from the receiver. To control congestion, the switches rate-limit the credit packets in the network.
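The effect of credit scheduling on a congested link can be illustrated with a toy model. The sketch below is not the team's implementation; it assumes a single bottleneck link that carries one data packet per time slot, a receiver that offers one credit to every sender in each slot, and a switch that lets only one credit through per slot and drops the rest. The function name `simulate_bottleneck` and all of its parameters are hypothetical.

```python
import random


def simulate_bottleneck(num_senders, num_slots, use_credits, seed=0):
    """Toy model of one bottleneck link that forwards one data packet per slot.

    Returns (delivered_packets, peak_queue_length, packets_sent_per_sender).
    """
    rng = random.Random(seed)
    sent = [0] * num_senders
    queue = 0
    peak_queue = 0
    delivered = 0

    for _ in range(num_slots):
        if use_credits:
            # Assumption: the receiver offers a credit to every sender, but the
            # switch rate-limits credits to one per slot and drops the others.
            admitted_sender = rng.randrange(num_senders)
            senders_this_slot = [admitted_sender]
        else:
            # No credits: every sender transmits whenever it has data.
            senders_this_slot = list(range(num_senders))

        # Each sender that is allowed to transmit puts one packet on the link.
        for sender in senders_this_slot:
            sent[sender] += 1
            queue += 1
        peak_queue = max(peak_queue, queue)

        # The bottleneck link drains one packet per slot.
        if queue > 0:
            queue -= 1
            delivered += 1

    return delivered, peak_queue, sent


if __name__ == "__main__":
    for mode in (True, False):
        delivered, peak, _ = simulate_bottleneck(8, 100, use_credits=mode)
        print(f"use_credits={mode}: delivered={delivered}, peak queue={peak}")
```

In this model, with 8 senders over 100 slots, both runs deliver 100 packets, so the link stays fully used either way. The difference is in the queue: with credits it never holds more than 1 packet, while without them it grows to 701 packets.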

The use of credit packets enables ExpressPass to respond to congestion proactively. By rate-limiting the credit packets in the network, ExpressPass ensures lossless data delivery in large-scale datacenters. It also guarantees bounded queueing: analysis shows that the network queue is bounded regardless of traffic distribution. Both testbed experiments and simulations confirm that ExpressPass reduces network queue occupancy by up to 92%.
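A rough calculation shows why rate-limiting credits keeps data traffic within link capacity. The sketch below assumes that each credit triggers one maximum-size data packet in the opposite direction, and that the on-wire sizes are 84 bytes for a credit (a minimum Ethernet frame plus preamble and inter-frame gap) and 1538 bytes for a data packet (a maximum Ethernet frame plus the same overhead). These sizes and the function names are assumptions for illustration, not values stated in this article.

```python
def credit_rate_limit_bps(link_bps, credit_bytes=84, data_bytes=1538):
    """Credit rate at which credits plus the data they trigger fill the link.

    credit_bytes and data_bytes are assumed on-wire sizes, not article values.
    """
    return link_bps * credit_bytes / (credit_bytes + data_bytes)


def triggered_data_rate_bps(credit_bps, credit_bytes=84, data_bytes=1538):
    """Data rate produced when every credit triggers one full-size data packet."""
    credits_per_second = credit_bps / (credit_bytes * 8)
    return credits_per_second * data_bytes * 8


if __name__ == "__main__":
    link = 10e9  # a hypothetical 10 Gbps link
    credit_rate = credit_rate_limit_bps(link)
    data_rate = triggered_data_rate_bps(credit_rate)
    print(f"credit share of link: {credit_rate / link:.2%}")
    print(f"data share of link:   {data_rate / link:.2%}")
```

Under these assumptions, credits are limited to roughly 5% of the link rate, and the data they trigger uses the remaining capacity of the link without exceeding it.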

ExpressPass can be implemented without hardware modifications. It requires only changes to the end-hosts’ network stack and to the switch configuration, whereas most recent congestion control schemes require hardware modifications before they can be deployed in a datacenter. Existing datacenters can therefore adopt ExpressPass to improve the user experience while maximizing resource usage at minimal cost.

ExpressPass was published and presented in August 2017 at the conference of the ACM Special Interest Group on Data Communication (SIGCOMM), one of the top conferences on computer networks, and attracted great interest from companies operating large-scale datacenters, such as Google and Huawei.

Original paper: https://dl.acm.org/citation.cfm?id=3098840

Ns-2 simulation code: https://github.com/kaist-ina/ns2-xpass

Most Popular

When and why do graph neural networks become powerful?

Read more

Smart Warnings: LLM-enabled personalized driver assistance

Read more

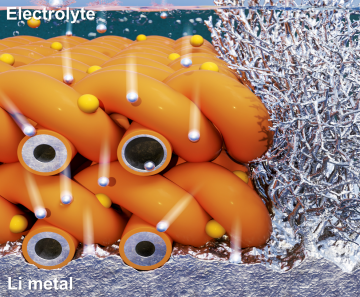

Extending the lifespan of next-generation lithium metal batteries with water

Read more

Professor Ki-Uk Kyung’s research team develops soft shape-morphing actuator capable of rapid 3D transformations

Read more

Oxynizer: Non-electric oxygen generator for developing countries

Read more