KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Spring 2025 Vol. 24Reinforcement learning algorithms tell us about how the human brain implements meta-reinforcement learning

Reinforcement learning algorithms tell us about how the human brain implements meta-reinforcement learning

Meta-reinforcement learning algorithms help us understand how the human brain learns to resolve complexity and uncertainty of the task. This study provides a glimpse of how it might ultimately be possible to use computational models to reverse engineer human reinforcement learning.

Article | Spring 2020

A research team, led by Prof. Sang Wan Lee’s lab at KAIST in collaboration with John O’Doherty at Caltech, succeeded in discovering both a computational and neural mechanism for human meta-reinforcement learning, opening up a possibility for porting key elements of human intelligence into artificial intelligence algorithms. This work was published on Dec 16, 2019 in the journal Nature Communications under the title, “Task complexity interacts with state-space uncertainty in the arbitration between model-based and model-free learning”.

Human reinforcement learning is an inherently complex and dynamic process, involving goal setting, strategy choice, action selection, strategy modification, cognitive resource allocation, etc. To make matters worse, humans often need to rapidly make important decisions even before getting the opportunity to collect sufficient information, unlike when using deep learning methods to model learning and decision-making in artificial intelligence applications.

The team used a technique called “reinforcement learning theory-based experiment design” to optimize the three variables of the two-stage Markov decision task: goal, task complexity, and task uncertainty. This experimental design technique allowed the team to not only control confounding factors, but also to create a situation similar to that which occurs in actual human problem solving. Secondly, the team used a technique called “model-based neuroimaging analysis”. Based on the acquired behavior and fMRI data, more than 100 different types of meta-reinforcement learning algorithms were pitted against each other to find a computational model that can explain both behavioral and neural data. Thirdly, the team applied an analytical method called “parameter recovery analysis”, involving high-precision behavioral profiling of both human subjects and computational models. In this way, the team was able to accurately identify a computational model of meta-reinforcement learning, making sure not only that the model’s apparent behavior is similar to that of humans, but also that the model solves the problem in the same way as humans do.

The team found that people tended to increase planning-based reinforcement learning (called model-based control), in response to increasing task complexity. However, they resorted to a simpler, more resource-efficient strategy called model-free control when both uncertainty and task complexity were high. This suggests that both the task uncertainty and the task complexity interact during the meta control of reinforcement learning. Computational fMRI analyses revealed that task complexity interacts with neural representations of the reliability of the learning strategies in the inferior prefrontal cortex.

These findings significantly advance the understanding of the nature of the computations being implemented in the inferior prefrontal cortex during meta-reinforcement learning and provide insight into the more general question of how the brain resolves uncertainty and complexity in a dynamically changing environment. Furthermore, gaining an insight of how meta-reinforcement learning works in the human brain will be of enormous interest to researchers in both the artificial intelligence and human/computer interaction fields, due to the potential of applying core insights gleaned on how human intelligence works to AI algorithms.

Most Popular

When and why do graph neural networks become powerful?

Read more

Smart Warnings: LLM-enabled personalized driver assistance

Read more

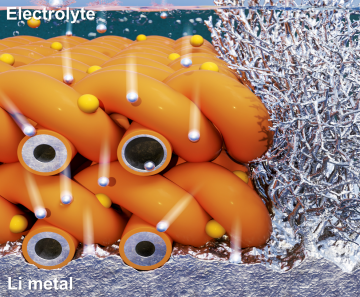

Extending the lifespan of next-generation lithium metal batteries with water

Read more

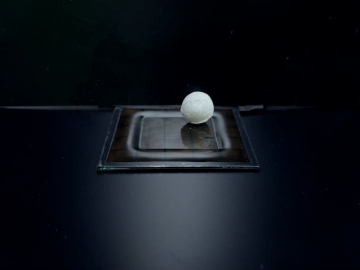

Professor Ki-Uk Kyung’s research team develops soft shape-morphing actuator capable of rapid 3D transformations

Read more

Oxynizer: Non-electric oxygen generator for developing countries

Read more