KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Spring 2025 Vol. 24

I-Keyboard: fully imaginary keyboard on touch devices empowered by a deep neural decoder

Researchers at KAIST developed I-Keyboard, a fully imaginary keyboard for touch devices empowered by a deep neural decoder. Because I-Keyboard is invisible, the whole screen of a mobile device stays available for content, and the decoder lets users start typing from any position on a touch screen, at any angle, without a calibration step. I-Keyboard showed 18.95% and 4.06% increases in typing speed (45.57 WPM) and accuracy (95.84%), respectively, over conventional imaginary keyboards.

Article | Spring 2020

The birth of mobile and ubiquitous computing has prompted the development of soft keyboards. Contemporary soft keyboards possess a few limitations. First, the lack of tactile feedback increases the rate of typos. Second, soft keyboards hinder mobile devices from presenting enough content because they occupy a relatively large portion of the display screen (up to 40%).

Mr. Kim Ue-Hwan, Mr. Yoo Sahng-Min and Prof. Kim Jong-Hwan of the Robot Intelligent Technology Lab in the School of Electrical Engineering at the Korea Advanced Institute of Science and Technology (KAIST) developed an imaginary keyboard (I-Keyboard) to tackle these limitations of soft keyboards. I-Keyboard is invisible, which maximizes the usable screen area on mobile devices. Moreover, I-Keyboard has no pre-defined layout, shape, or key size. Finally, I-Keyboard comes with a deep-learning-based decoding algorithm that does not require a calibration step. The proposed deep neural decoder (DND) effectively handles both hand drift and tap variability and dynamically translates touch points into words.

The research team conducted a user study to analyze user behavior and to collect data for training the DND (7,245 phrases and 196,194 keystroke instances, the largest dataset for developing imaginary keyboards). The team extracted the keyboard mental model of each participant for analysis (Fig. 1). The key finding was that each mental model consistently resembles the physical keyboard layout, even though each user pictures that layout somewhat differently.
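One simple way to picture a "keyboard mental model" is as the average position where a participant taps for each intended key. The Python sketch below illustrates that idea only; the function name `mental_model`, the `(key, x, y)` tap format, and the sample coordinates are assumptions made for illustration, not the procedure or data used in the study.

```python
from collections import defaultdict
from statistics import mean


def mental_model(taps):
    """Return {key: (mean_x, mean_y)} from labeled taps.

    `taps` is an iterable of (intended_key, x, y) tuples. This format is a
    hypothetical stand-in for the keystroke data collected in the user study.
    """
    groups = defaultdict(list)
    for key, x, y in taps:
        groups[key].append((x, y))
    # The centroid of all taps for a key approximates where the user
    # imagines that key to be.
    return {
        key: (mean(p[0] for p in pts), mean(p[1] for p in pts))
        for key, pts in groups.items()
    }


# Made-up sample taps from one participant (screen coordinates in pixels).
taps = [
    ("a", 10.0, 50.0),
    ("a", 14.0, 54.0),
    ("s", 30.0, 52.0),
    ("s", 34.0, 48.0),
]
model = mental_model(taps)
```

With these sample taps, `model["a"]` is `(12.0, 52.0)` and `model["s"]` is `(32.0, 50.0)`. Comparing such centroids across participants is one way to see how far individual mental models deviate from the physical layout.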

After analyzing the user data, the team designed the decoder. It consists of two main components: a statistical decoder and a semantic decoder (a character language model). The statistical decoding layer converts touch positions into text, which may still contain the user's mistypes (for example, tapping ‘s’ instead of ‘a’ turns the intended ‘apple’ into ‘spple’). The character language model then uses the semantic relationships between characters to correct the mistyped content (for example, turning ‘spple’ into ‘apple’). Through these two stages, the deep neural decoder remains robust to drift in the user’s hand position and to variation in scale (Table 1). The deep neural decoder reached an accuracy of 95.84%, reported as the best in the world, while the conventional algorithm achieved 91.27%.
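The two-stage idea can be sketched with a toy, non-neural stand-in. In the Python sketch below, stage one maps each touch to its nearest key, and stage two rescores candidate words by combining touch distance with a word prior. Everything here is an assumption made for illustration: the unit-grid QWERTY key centers, the Gaussian touch model with `sigma=0.5`, and the two-word vocabulary with made-up priors, which stands in for the character language model. The actual DND is a deep neural network and is not reproduced here.

```python
import math

# Hypothetical key centers on a unit QWERTY grid (assumption for illustration;
# I-Keyboard itself has no pre-defined layout).
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
OFFSETS = [0.0, 0.25, 0.75]
KEY_CENTERS = {
    ch: (col + OFFSETS[r], float(r))
    for r, row in enumerate(ROWS)
    for col, ch in enumerate(row)
}


def sq_dist(p, q):
    """Squared Euclidean distance between two 2D points."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def statistical_decode(touches):
    """Stage 1 stand-in: map each touch to the nearest key center."""
    return "".join(
        min(KEY_CENTERS, key=lambda ch: sq_dist(t, KEY_CENTERS[ch]))
        for t in touches
    )


def language_correct(touches, vocab, sigma=0.5):
    """Stage 2 stand-in: pick the word maximizing touch fit plus log prior."""
    def score(word):
        spatial = -sum(
            sq_dist(t, KEY_CENTERS[c]) for t, c in zip(touches, word)
        ) / (2 * sigma ** 2)
        return spatial + math.log(vocab[word])

    candidates = [w for w in vocab if len(w) == len(touches)]
    return max(candidates, key=score)


# The user intends "apple", but the first tap drifts toward 's'.
touches = [(0.85, 1.0), (9.0, 0.0), (9.0, 0.0), (8.25, 1.0), (2.0, 0.0)]
raw = statistical_decode(touches)                                # "spple"
fixed = language_correct(touches, {"apple": 0.7, "ample": 0.3})  # "apple"
```

Here the position-only stage yields ‘spple’ because the first tap lands closer to ‘s’ than to ‘a’, and the rescoring stage recovers ‘apple’ because it fits the touches far better than ‘ample’ does.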

Table 1. Decoding examples (G.T. stands for ground-truth).

The researchers’ findings suggest that I-Keyboard could create new value for the mobile computing industry, since it could transform the way users enter text and the applications that depend on text entry. This research was published on November 28, 2019 in the IEEE Transactions on Cybernetics under the title “I-Keyboard: Fully Imaginary Keyboard on Touch Devices Empowered by Deep Neural Decoder” (DOI 10.1109/TCYB.2019.2952391).

Most Popular

When and why do graph neural networks become powerful?

Read more

Smart Warnings: LLM-enabled personalized driver assistance

Read more

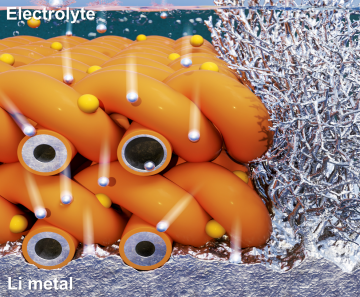

Extending the lifespan of next-generation lithium metal batteries with water

Read more

Professor Ki-Uk Kyung’s research team develops soft shape-morphing actuator capable of rapid 3D transformations

Read more

Oxynizer: Non-electric oxygen generator for developing countries

Read more