KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

TensorDIMM: An AI accelerator for personalized recommendation algorithms based on processing-in-memory

TensorDIMM is the first architectural solution tackling sparse embedding layers of personalized recommendation systems. Our solution is based on a practical processing-in-memory architecture that fundamentally addresses the memory capacity and bandwidth challenges of sparse embedding layers widely employed in recommendation systems, natural language processing, and speech recognition.

Article | Fall 2020

Personalized recommendation systems are becoming increasingly popular in today’s datacenters, powering application domains such as online advertising, movie and music recommendations, e-commerce, and news feeds. Accelerating recommendation systems for high performance and high energy efficiency is therefore increasingly important for hyperscalers such as Google, Facebook, Amazon, and Microsoft. The performance of these systems is directly correlated with a hyperscaler’s revenue, which makes the quality of service (QoS) delivered to end consumers vital. A key challenge in deploying recommendation systems, however, is their excessive memory footprint combined with limited memory bandwidth: recommendation algorithms can consume tens to thousands of GBs of memory spread across several large “embedding tables”.

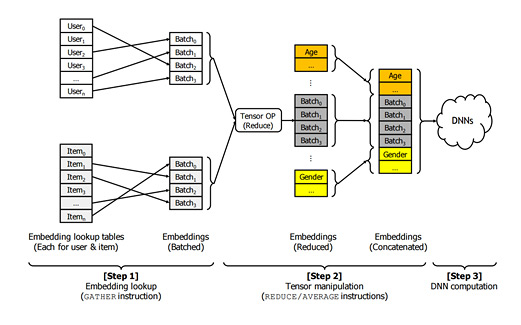

An “embedding” is a learned continuous vector representation in a low-dimensional latent space, projected from a personalized categorical feature (e.g., a preferred movie genre or food). A recommendation system organizes these embeddings into tables, called embedding tables, and ultimately predicts the probability of a certain event by combining the embedding features [Figure 1].
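A minimal sketch of how embedding features might be looked up and combined, assuming sum pooling over the selected rows and a toy interaction (pairwise dot products passed through a sigmoid). The function names `embedding_bag_sum` and `predict_probability` and the table contents are hypothetical; production models typically feed the pooled embeddings into learned neural-network layers rather than this toy scoring step.

```python
import math
from typing import List, Sequence

Vector = List[float]


def embedding_bag_sum(table: Sequence[Vector], ids: Sequence[int]) -> Vector:
    """Gather the rows selected by `ids` and sum them element-wise (sum pooling)."""
    pooled = [0.0] * len(table[0])
    for i in ids:
        row = table[i]  # sparse, data-dependent access into a potentially huge table
        for d, value in enumerate(row):
            pooled[d] += value
    return pooled


def predict_probability(features: Sequence[Vector]) -> float:
    """Toy interaction (hypothetical): sum of pairwise dot products, squashed by a sigmoid."""
    score = 0.0
    for a in range(len(features)):
        for b in range(a + 1, len(features)):
            score += sum(x * y for x, y in zip(features[a], features[b]))
    return 1.0 / (1.0 + math.exp(-score))


# Hypothetical 2-dimensional tables: one for movie genres, one for user IDs.
genre_table = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5]]
user_table = [[0.2, 0.2], [-0.4, 0.1]]

genres = embedding_bag_sum(genre_table, [0, 2])  # the user prefers genres 0 and 2
user = embedding_bag_sum(user_table, [1])
print(predict_probability([genres, user]))
```

The only arithmetic applied to the fetched rows is an element-wise sum; the expensive part is reading scattered rows out of large tables.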

For example, YouTube or Netflix predicts the probability that a user will watch a recommended video clip. Because there are countless categorical features and users, a recommendation system can contain many embedding tables, which together can amount to tens to thousands of GBs. This is why datacenters suffer from the excessive memory footprint of recommendation systems.
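The footprint follows directly from table shape: each table stores one vector per categorical value, so its size is rows × dimension × bytes per element. The short calculation below assumes FP32 (4-byte) elements and a hypothetical model with ten tables of 100 million 64-dimensional rows; the table sizes are illustrative and not taken from any specific deployed system.

```python
from typing import Iterable, Tuple


def embedding_table_bytes(num_rows: int, dim: int, bytes_per_element: int = 4) -> int:
    """Size of one table: one `dim`-wide vector per categorical value (4 bytes = FP32)."""
    return num_rows * dim * bytes_per_element


def total_footprint_gb(tables: Iterable[Tuple[int, int]], bytes_per_element: int = 4) -> float:
    """Sum the sizes of (num_rows, dim) tables and report decimal gigabytes."""
    total = sum(embedding_table_bytes(rows, dim, bytes_per_element) for rows, dim in tables)
    return total / 1e9


# Hypothetical model: ten tables, each with 100 million rows of 64-dimensional FP32 vectors.
tables = [(100_000_000, 64)] * 10
print(total_footprint_gb(tables))  # 256.0
```

Doubling either the number of categorical values or the embedding dimension doubles the footprint, which is how real models reach the capacity range described above.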

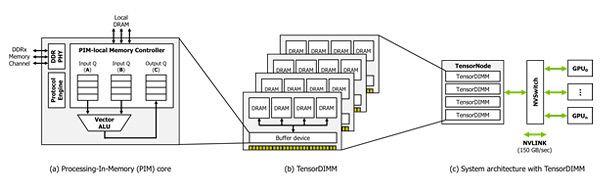

Prof. Minsoo Rhu and his research team at the School of Electrical Engineering at KAIST have developed TensorDIMM, the first architectural solution tackling the memory capacity and bandwidth challenges of embedding layers employed in personalized recommendation systems [Figure 2].

The team observed that the tensor operations applied to the vectors fetched from embedding tables are simple, element-wise vector operations. In conventional processor-centric systems, however, every embedding vector must first be fetched from external, off-chip memory into the processor before the operation can execute, so performance is easily bottlenecked by limited off-chip memory bandwidth.

TensorDIMM takes a completely different approach, employing a processing-in-memory (PIM) solution where the tensor operations targeting embedding vectors are conducted directly “near/inside” the memory modules, rather than fetching them all the way to the main processor die.
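A back-of-the-envelope traffic model shows why moving the reduction next to memory helps. This is a simplified sketch, assuming FP32 embeddings, sum pooling, and a hypothetical query that pools 80 vectors of dimension 64; it ignores the (much smaller) traffic for sending indices and commands to the memory modules. The function names are hypothetical.

```python
def offchip_bytes_conventional(num_lookups: int, dim: int, bytes_per_element: int = 4) -> int:
    """Processor-centric: every gathered vector crosses the off-chip bus before reduction."""
    return num_lookups * dim * bytes_per_element


def offchip_bytes_near_memory(dim: int, bytes_per_element: int = 4) -> int:
    """Near-memory: the modules gather and reduce locally; only the pooled vector leaves."""
    return dim * bytes_per_element


# Hypothetical query: pool 80 embeddings of dimension 64 (FP32).
conventional = offchip_bytes_conventional(num_lookups=80, dim=64)
near_memory = offchip_bytes_near_memory(dim=64)
print(conventional, near_memory, conventional // near_memory)  # 20480 256 80
```

In this model the reduction in off-chip traffic equals the number of vectors pooled per output, so the benefit grows with the number of lookups per query.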

This drastically improves the effective memory bandwidth available for tensor operations over embeddings, fundamentally addressing the memory bandwidth bottleneck of sparse embedding layers. In addition, TensorDIMM adopts a disaggregated memory system architecture, which substantially expands the available memory capacity.
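One way a near-memory design can raise effective bandwidth is to let the processing units in several memory modules work at the same time. The sketch below assumes one simple layout for illustration: every embedding vector is split column-wise across modules, each module gathers and sums only its own slice, and the slices are concatenated afterwards. The helper names and the column-wise layout are assumptions made for this example; the paper's exact data layout and hardware interface are not reproduced here.

```python
from typing import List, Sequence

Vector = List[float]
Table = List[Vector]


def shard_columns(table: Table, num_modules: int) -> List[Table]:
    """Split every row column-wise into `num_modules` equal slices, one per memory module."""
    dim = len(table[0])
    if dim % num_modules != 0:
        raise ValueError("this sketch requires dim to be divisible by num_modules")
    width = dim // num_modules
    return [[row[m * width:(m + 1) * width] for row in table] for m in range(num_modules)]


def module_local_sum(shard: Table, ids: Sequence[int]) -> Vector:
    """Work done by one module's near-memory unit: gather and sum only its own slice."""
    acc = [0.0] * len(shard[0])
    for i in ids:
        for d, value in enumerate(shard[i]):
            acc[d] += value
    return acc


def sharded_embedding_sum(shards: Sequence[Table], ids: Sequence[int]) -> Vector:
    """Modules are independent (concurrent in hardware, sequential here); concatenate slices."""
    result: Vector = []
    for shard in shards:
        result.extend(module_local_sum(shard, ids))
    return result


table = [[float(r * 8 + c) for c in range(8)] for r in range(5)]  # 5 rows, dim 8
shards = shard_columns(table, num_modules=4)  # each module stores 2 of the 8 columns
print(sharded_embedding_sum(shards, [0, 3, 4]))
```

Because each module reads only `dim / num_modules` elements per lookup from its own local memory, and all modules can do so concurrently, the aggregate bandwidth in this model grows with the number of modules while the final result matches a centralized reduction.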

This research was published in the proceedings of the 52nd IEEE/ACM International Symposium on Microarchitecture (MICRO-52) under the title “TensorDIMM: A Practical Near-Memory Processing Architecture for Embeddings and Tensor Operations in Deep Learning”.

The work was subsequently selected as an IEEE Micro Top Picks Honorable Mention (“IEEE Micro – Special Issue on Top Picks from the 2019 Computer Architecture Conferences”), a recognition given to research papers with high novelty and potential for long-term impact. It was the first such recognition for KAIST.
