KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Spring 2025 Vol. 24

A deep-learned E-skin decodes complex human motion

A deep-learned single-strained electronic skin sensor can capture human motion from a distance using only one sensor. Placed on the surface of the skin, the sensor decodes complex five-finger motions in real time.

Article | Fall 2020

Capturing body motion is generally costly because conventional approaches require sensor networks that cover every curvilinear surface of the target area. Understanding a whole system means capturing the state of each of its components from the signals they emit, but placing sensors on every part of the system is impractical because of the high cost and inefficiency. Rather than affixing sensors to every joint and muscle, pinpointing a single area from which signals from multiple areas can be extracted reduces both cost and effort.

Advanced technologies such as global seismographic networks and radio telescope systems take an efficient approach: they collect signals with highly sensitive detectors at positions where the signals converge. The information entangled in the converged signals can then be decoupled and integrated into a single frame of knowledge, giving an accurate observation of the entire system. Borrowing this idea, the research team proposed placing a highly sensitive skin-like sensor at a point on the body where signals converge and using deep learning techniques to decouple the converged signals accurately.

The research team, led by Prof. Sungho Jo, presented a new sensing paradigm for motion tracking. With laser-patterned silver nanoparticles, the sensor can be attached unobtrusively to the skin and sensitively collects the converged signals. Machine intelligence then uncovers the motions hidden deep inside the coupled signals.

A single deep-learned sensor successfully decoded finger motions in a real-time demonstration, with a virtual 3D hand mirroring the original motions. The Rapid Situation Learning (RSL) system collects data from arbitrary parts of the wrist and automatically trains the model in real time, so that the model can adapt to new signal patterns from different users or attachment areas.

Furthermore, the same concept can be extended to identify other human motions, such as gait motions from the pelvis and keyboard typing from the wrist. The sensory system is expected to track the motion of the entire body with a minimal sensor network and to facilitate indirect, remote measurement of human motions, which is applicable to wearable VR/AR systems.

This research was published in Nature Communications on May 1, 2020 under the title, “A deep-learned skin sensor decoding the epicentral human motions”.

Most Popular

When and why do graph neural networks become powerful?

Read more

Smart Warnings: LLM-enabled personalized driver assistance

Read more

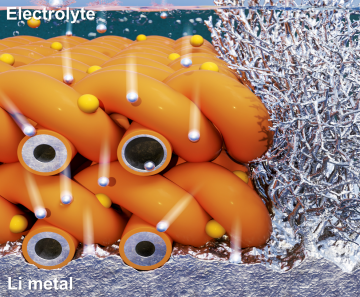

Extending the lifespan of next-generation lithium metal batteries with water

Read more

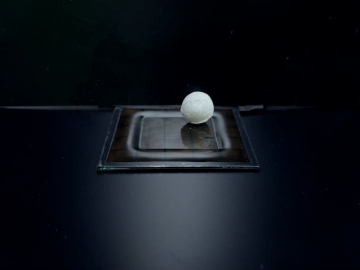

Professor Ki-Uk Kyung’s research team develops soft shape-morphing actuator capable of rapid 3D transformations

Read more

Oxynizer: Non-electric oxygen generator for developing countries

Read more