KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Spring 2025 Vol. 24How to Ask Questions “Wisely” to People to Maximize Information?

Crowdsourcing systems have become a popular platform to label and classify items in large-scale databases. A team led by Prof. Hye Won Chung has discovered an efficient way of asking questions to human workers by using a technique from information theory.

Article | Special Issue

Crowdsourcing systems have become a popular platform to label and classify items in large-scale databases with applications of image labeling, video annotation, recommendation, and data acquisition for deep learning. For example, a large dataset of movies can be classified, depending on whether each movie is suitable for kids to watch, by asking simple binary (yes/no) questions about each item to human workers in crowdsourcing systems. However, since workers in the systems are often not experts and may provide incorrect answers, the challenge is to infer the correct object labels from noisy answers provided by workers of unknown reliabilities. In particular, the important question is how to design queries and inference algorithms that can guarantee the recovery of the correct labels of the items at the minimum number of queries.

A team of information scientists led by Prof. Hye Won Chung in the School of Electrical Engineering has recently discovered an efficient way of asking questions to human workers in the crowdsourcing system, by using a technique from information theory. The main idea is to ask binary queries that may extract the maximum amount of information. For this purpose, the team has suggested an efficient of way of asking the “group attributes” of a set of items (instead of asking about a single item) at each querying. Some examples of such group querying include a pairwise comparison of two items, i.e., asking whether or not two items belong to the same class, or “triangle” queries, which compare three objects simultaneously.

Asking group attributes usually obtains more information per query than asking a single label, but at the same time, workers tend to provide wrong answers more often as the query becomes more complex. Therefore, the fundamental question asked by Prof. Chung’s team was whether the increased information efficiency from the more complex queries is large enough to offset the loss from the increased error probability. Chung’s group demonstrated both theoretically and empirically that asking group attributes can be more efficient than asking a single label in terms of reducing the required number of queries for correctly labeling items in a large dataset.

This research may provide further insights on how to “wisely” ask simple binary questions to people when the goal is to minimize the number of questions in discovering the core information, just like the classical game of 20 Questions but with some errors in the answers. The related research was published in the 2020 IEEE International Symposium on Information Theory (DOI: 10.1109/ISIT44484.2020.9174227).

Most Popular

When and why do graph neural networks become powerful?

Read more

Smart Warnings: LLM-enabled personalized driver assistance

Read more

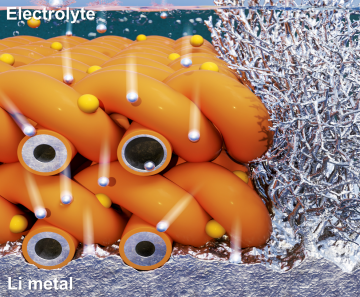

Extending the lifespan of next-generation lithium metal batteries with water

Read more

Professor Ki-Uk Kyung’s research team develops soft shape-morphing actuator capable of rapid 3D transformations

Read more

Oxynizer: Non-electric oxygen generator for developing countries

Read more