KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Fall 2025 Vol. 25

FR-Train: Fair and Robust Training for Trustworthy AI

FR-Train is the first holistic framework for fair and robust training. Fairness and robustness are critical elements of Trustworthy AI, where machine learning models must be trained in the presence of data bias and poisoning.

Article | Spring 2021

Prof. Steven Euijong Whang and Prof. Changho Suh’s research team developed a new machine learning framework for Trustworthy AI. The research was led by Ph.D. student Yuji Roh (advisor: Steven Euijong Whang) in collaboration with Prof. Kangwook Lee at the University of Wisconsin-Madison. It was published under the title “FR-Train: A Mutual Information-Based Approach to Fair and Robust Training” at the International Conference on Machine Learning (ICML) 2020, a top conference in machine learning.

As machine learning becomes widespread in modern society, making AI trustworthy is becoming a critical issue. Many top companies, including Google, IBM, and Microsoft, recognize the importance of trustworthy AI and are putting substantial effort into developing more reliable systems. Machine learning systems have traditionally aimed mainly at training accurate models, but there is now an urgent social need to address multiple requirements, including fairness, robustness, transparency, and explainability.

Among the requirements of trustworthy AI, the research team focuses on fairness and robustness, two closely related issues that both depend on the same training data. In sensitive applications such as healthcare, finance, and self-driving cars, a trained model must not discriminate against customers based on sensitive attributes such as age, gender, race, or religion. In addition, because applications often rely on external datasets for their training data, training must be resilient against noisy, subjective, or even adversarial data.

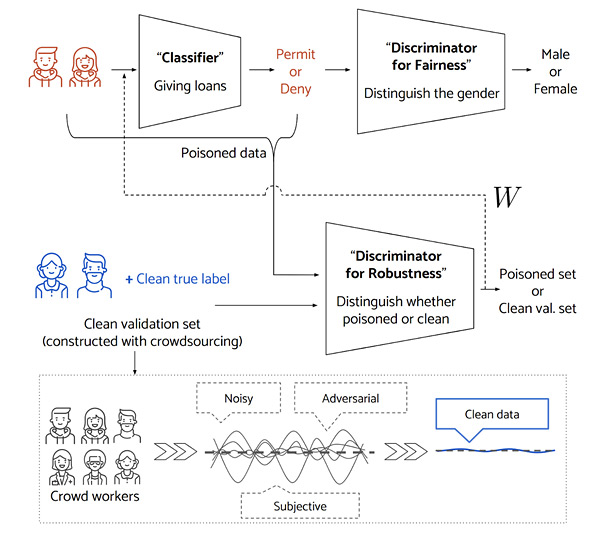

The research team has proposed a new training method called FR-Train, which achieves fairness and robustness within a single holistic framework. FR-Train is the first framework to support both fair and robust training and is based on a novel mutual information-based interpretation. As shown in Figure 1, FR-Train benefits from the synergy of two components: (1) a fairness discriminator that tries to distinguish the predictions for one sensitive group from those for the other groups, and (2) a robustness discriminator that tries to distinguish training data paired with the model's predictions from a small but clean validation set; the robustness discriminator is also used to further improve fairness training through example re-weighting. Across various applications, FR-Train shows almost no decrease in fairness or accuracy in the presence of data poisoning, because it both mitigates bias and defends against poisoning.
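The example re-weighting idea can be illustrated with a toy sketch. Everything below is an assumption for illustration, not FR-Train's code: the `clean_score` values are hand-picked numbers standing in for a trained robustness discriminator's estimate that an example resembles the clean validation set, and disparate impact (the ratio of positive-prediction rates between two sensitive groups) is used as one common fairness measure. FR-Train itself applies such weights inside adversarial training; this sketch only shows how down-weighting suspicious examples changes a fairness statistic.

```python
from dataclasses import dataclass


@dataclass
class Example:
    group: int          # sensitive attribute z (0 or 1)
    prediction: int     # classifier prediction y_hat (0 or 1)
    clean_score: float  # hypothetical robustness-discriminator output in [0, 1]


def example_weights(examples):
    """Normalize clean scores so that the weights sum to the number of examples."""
    total = sum(e.clean_score for e in examples)
    if total == 0:
        raise ValueError("all clean scores are zero")
    n = len(examples)
    return [n * e.clean_score / total for e in examples]


def weighted_positive_rate(examples, weights, group):
    """Weighted fraction of the examples in `group` that are predicted positive."""
    num = sum(w * e.prediction for e, w in zip(examples, weights) if e.group == group)
    den = sum(w for e, w in zip(examples, weights) if e.group == group)
    if den == 0:
        raise ValueError(f"group {group} has no weight")
    return num / den


def disparate_impact(examples, weights):
    """min(r0 / r1, r1 / r0) of group positive rates; 1.0 means equal rates."""
    r0 = weighted_positive_rate(examples, weights, 0)
    r1 = weighted_positive_rate(examples, weights, 1)
    if r0 == 0 or r1 == 0:
        return 0.0
    return min(r0 / r1, r1 / r0)


if __name__ == "__main__":
    # Toy data: the last two group-1 examples are suspected poison (low clean score).
    data = [
        Example(0, 1, 0.9), Example(0, 0, 0.9), Example(0, 1, 0.9), Example(0, 0, 0.9),
        Example(1, 1, 0.9), Example(1, 0, 0.9), Example(1, 0, 0.1), Example(1, 0, 0.1),
    ]
    uniform = [1.0] * len(data)
    print(round(disparate_impact(data, uniform), 3))                # 0.5
    print(round(disparate_impact(data, example_weights(data)), 3))  # 0.9
```

In this toy data, the two low-score group-1 examples pull the unweighted disparate impact down to 0.5; after re-weighting it rises to 0.9, closer to the value of 1.0 measured on the trusted examples alone.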

The research team believes that FR-Train can become a foundation for future fair and robust AI systems. More information can be found at the following links.

[Links]

(1) Paper link: http://proceedings.mlr.press/v119/roh20a.html

(2) Conference video: https://icml.cc/virtual/2020/poster/6025
