KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Spring 2025 Vol. 24

Neural-enhanced Live Streaming

Current video streaming systems suffer from a fundamental limitation: video quality depends heavily on the available bandwidth between servers and clients. Recently, deep-learning-based super-resolution has emerged as a promising way to overcome this limitation by recovering high-quality video from lower-quality transmissions. However, existing super-resolution technologies are impractical because they 1) are too computationally expensive to run on commercial mobile devices and 2) require long training times that live video streaming cannot afford. To tackle these problems, we developed a practical deep-learning-based video streaming system that supports both commercial mobile devices and live video. Our system is expected to drastically reduce the bandwidth requirements of emerging media such as AR, VR, and 4K video.

Article | Spring 2021

Professor Dongsu Han and his Ph.D. students Hyunho Yeo, Jaehong Kim, and Youngmok Jung have developed a novel deep-learning-driven video streaming technology, dubbed neural-enhanced video streaming, which greatly improves user quality of experience for on-demand and live video streams. Traditional video streaming suffers from a fundamental limitation: video quality depends heavily on the available bandwidth between servers and clients. To tackle this limitation, the research team proposed neural-enhanced video streaming, in which a video client uses its own computing power to apply a super-resolution deep neural network (DNN) to the video it receives. This enables the recovery of high-quality video from lower-quality transmissions (e.g., 1080p from 240-720p), independent of network resources. In addition, the research team successfully integrated neural-enhanced video streaming into various video streaming applications, including adaptive streaming, live streaming, and mobile streaming. To support mobile devices, which have stringent power and computing constraints, the research team developed techniques that accelerate super-resolution by more than an order of magnitude.
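The client-side idea described above can be illustrated with a minimal sketch. It is not the research team's implementation: the function names `nearest_neighbor_upscale` and `play_stream` are hypothetical, frames are modeled as plain lists of pixel intensities, and simple pixel repetition stands in for the super-resolution DNN that a real client would run. The point is only to show where the enhancement step sits: after the low-resolution frame arrives, on the client's own hardware.

```python
from typing import Callable, List

# Hypothetical frame model: a grayscale frame as rows of pixel intensities (0-255).
Frame = List[List[int]]


def nearest_neighbor_upscale(frame: Frame, scale: int) -> Frame:
    """Placeholder enhancer: repeat each pixel `scale` times along both axes.

    Assumption for illustration only. A real client would run a trained
    super-resolution DNN at this point instead of pixel repetition.
    """
    upscaled: Frame = []
    for row in frame:
        wide_row = [pixel for pixel in row for _ in range(scale)]
        # Emit `scale` independent copies of the widened row.
        upscaled.extend(list(wide_row) for _ in range(scale))
    return upscaled


def play_stream(
    low_res_frames: List[Frame],
    enhance: Callable[[Frame, int], Frame],
    scale: int,
) -> List[Frame]:
    """Client-side loop: enhance every received low-resolution frame locally."""
    return [enhance(frame, scale) for frame in low_res_frames]


# One 2x2 "low-resolution" frame, as if received over a constrained link.
received = [[[10, 20], [30, 40]]]
displayed = play_stream(received, nearest_neighbor_upscale, scale=2)
print(len(displayed[0]), "x", len(displayed[0][0]))  # prints "4 x 4"
```

Because the enhancer is passed in as a function, the same playback loop could swap pixel repetition for a learned model without changing how frames are delivered, which mirrors the article's point that the quality gain comes from client computation rather than additional bandwidth.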

The work was published at ACM SIGCOMM 2020 [1] and ACM MobiCom 2020 [2].

For more details, refer to the project website below.

http://ina.kaist.ac.kr/~livenas/

[1] Neural-Enhanced Live Streaming: Improving Live Video Ingest via Online Learning, Jaehong Kim∗, Youngmok Jung∗, Hyunho Yeo, Juncheol Ye, and Dongsu Han, ACM SIGCOMM 2020

[2] NEMO: Enabling Neural-enhanced Video Streaming on Commodity Mobile Devices, Hyunho Yeo, Chan Ju Chong, Youngmok Jung, Juncheol Ye, and Dongsu Han, ACM MobiCom 2020

Most Popular

When and why do graph neural networks become powerful?

Read more

Smart Warnings: LLM-enabled personalized driver assistance

Read more

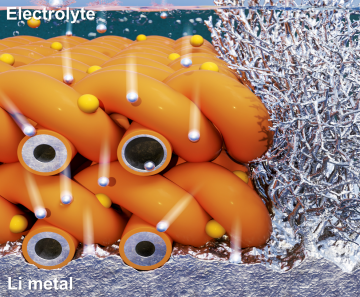

Extending the lifespan of next-generation lithium metal batteries with water

Read more

Professor Ki-Uk Kyung’s research team develops soft shape-morphing actuator capable of rapid 3D transformations

Read more

Oxynizer: Non-electric oxygen generator for developing countries

Read more