KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Spring 2025 Vol. 24

Emergence of number sense in untrained neural networks

A study using an artificial deep neural network shows that visual number sense can arise spontaneously in the complete absence of learning, providing new insight into the origin of innate cognitive functions in the brain.

Article | Fall 2021

Researchers have found that higher cognitive functions can arise spontaneously in untrained neural networks. A KAIST research team led by Professor Se-Bum Paik from the Department of Bio and Brain Engineering has shown that neuronal number selectivity can arise even in completely untrained deep neural networks. The finding offers new insight into the mechanisms underlying the development of cognitive functions in both biological and artificial neural networks, and it significantly affects our understanding of the origin of early brain functions before sensory experience. The study, published in Science Advances on January 1, 2021, demonstrates that number-selective neurons appear in randomly initialized deep neural networks in the complete absence of learning, and that they show the single- and multi-neuron characteristics observed in biological brains.

“Number sense” is the ability to estimate numbers without counting, and it provides a foundation for complicated cognitive processing in the brain. In numerically naïve animals, individual neurons in the prefrontal cortex have been observed to respond selectively to the number of visual items. These results suggest that number-selective neurons arise spontaneously in the brain before visual experience and that they provide a foundation for innate number sense in young animals and humans. However, the details of how number neurons originate are not yet understood.

Using an artificial deep neural network that models the ventral visual stream of the brain, the research team found that number-selective neurons can emerge spontaneously in randomly initialized networks without learning. Moreover, the team confirmed that these number neurons show the single- and multi-neuron characteristics observed in biological brains, with tuning that follows the Weber-Fechner law. The researchers also confirmed that the responses of these neurons enable the network to perform a number comparison task, even when the numerosity of the stimulus is incongruent with low-level visual cues such as the total area or the size of the visual patterns in the stimulus. This implies that the observed number neurons encode the abstract numerosity of a stimulus image, independently of other visual cues.
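The idea of probing an untrained network for numerosity preference can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions, not the study's model or analysis: the two-layer fully connected network, the single-pixel dot images, the layer sizes, and the names `dot_image`, `forward`, and `preferred` are all hypothetical, and the sketch applies no statistical test for selectivity.

```python
import numpy as np

rng = np.random.default_rng(0)
SIZE = 32  # assumed image side length (hypothetical)

def dot_image(n_dots):
    """Flattened binary image with n_dots single-pixel dots at distinct positions."""
    img = np.zeros(SIZE * SIZE)
    img[rng.choice(SIZE * SIZE, size=n_dots, replace=False)] = 1.0
    return img

numerosities = [1, 2, 4, 8, 16]
n_images = 50                  # images per numerosity
n_hidden, n_units = 256, 100   # assumed layer sizes

# Untrained network: weights are drawn once at random and never updated.
w1 = rng.normal(0.0, 1.0 / np.sqrt(SIZE * SIZE), size=(SIZE * SIZE, n_hidden))
w2 = rng.normal(0.0, 1.0 / np.sqrt(n_hidden), size=(n_hidden, n_units))

def forward(images):
    """Feedforward pass with ReLU nonlinearities; images has shape (batch, SIZE*SIZE)."""
    hidden = np.maximum(images @ w1, 0.0)
    return np.maximum(hidden @ w2, 0.0)

# Mean response of every output unit to each numerosity.
mean_response = np.stack([
    forward(np.stack([dot_image(n) for _ in range(n_images)])).mean(axis=0)
    for n in numerosities
])  # shape: (len(numerosities), n_units)

# Numerosity that drives each unit most strongly on average.
preferred = np.array(numerosities)[mean_response.argmax(axis=0)]
```

A unit's entry in `preferred` only marks where its average response peaks. Deciding whether a unit is genuinely number-selective would additionally require a statistical criterion and stimulus sets that control for low-level cues such as total area, which this sketch leaves out.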

Further investigation revealed that the neuronal tuning for various levels of numerosity originates from a combination of monotonically decreasing and increasing activity units in the earlier layers, suggesting that the observed number tuning emerges from the statistical variation of feedforward projections. These findings suggest that innate cognitive functions can emerge spontaneously from the statistical complexity embedded in hierarchical feedforward projection circuitry, even in the complete absence of learning. Overall, the results offer a conceptual advance and new insight into the mechanisms underlying the development of innate functions in both biological and artificial neural networks, which may help unravel the mystery of the generation and evolution of intelligence.
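How a combination of monotonic units can yield a tuned unit is easy to see in a toy example. The sketch below is an illustration under assumptions, not the mechanism as implemented in the study: it models one increasing and one decreasing unit as sigmoids of log-numerosity, sums them with equal weights, and rectifies the result. The function `tuned_response` and its parameters `rise_at` and `fall_at` are hypothetical names.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def tuned_response(numerosities, rise_at, fall_at):
    """Combine one increasing and one decreasing unit into a tuned unit.

    rise_at and fall_at are the log2-numerosities at which the increasing
    and decreasing units reach half of their maximum (assumed parameters).
    """
    x = np.log2(numerosities)
    increasing = sigmoid(x - rise_at)   # grows monotonically with numerosity
    decreasing = sigmoid(fall_at - x)   # shrinks monotonically with numerosity
    # The sum minus a bias of 1, followed by a ReLU, leaves a single bump
    # between rise_at and fall_at.
    return np.maximum(increasing + decreasing - 1.0, 0.0)

numerosities = np.array([1, 2, 4, 8, 16, 32])
response = tuned_response(numerosities, rise_at=1.0, fall_at=3.0)
preferred = int(numerosities[np.argmax(response)])
print(preferred)  # 4
```

Neither input unit prefers an intermediate numerosity on its own, yet their rectified sum peaks at 4, midway between the two half-maximum points on the log scale. Shifting `rise_at` and `fall_at` moves the peak, so different weightings of monotonic inputs can produce units tuned to different numerosities.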

Publication:

Gwangsu Kim, Jaeson Jang, Seungdae Baek, Min Song & Se-Bum Paik, Visual number sense in untrained deep neural networks, Science Advances 7(1), eabd6127 (2021)

Available online: https://advances.sciencemag.org/content/7/1/eabd6127

Most Popular

When and why do graph neural networks become powerful?

Read more

Smart Warnings: LLM-enabled personalized driver assistance

Read more

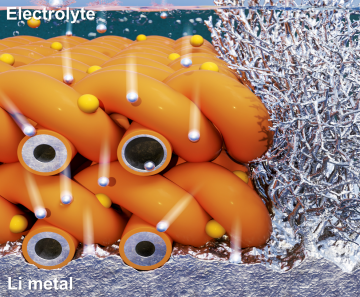

Extending the lifespan of next-generation lithium metal batteries with water

Read more

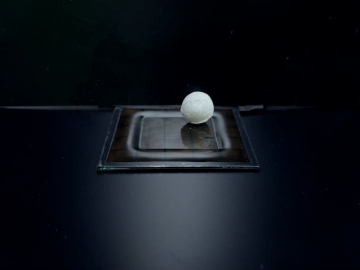

Professor Ki-Uk Kyung’s research team develops soft shape-morphing actuator capable of rapid 3D transformations

Read more

Oxynizer: Non-electric oxygen generator for developing countries

Read more