KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Fall 2025 Vol. 25

Super-fast and accurate monocular 3D object detection for Autonomous Driving

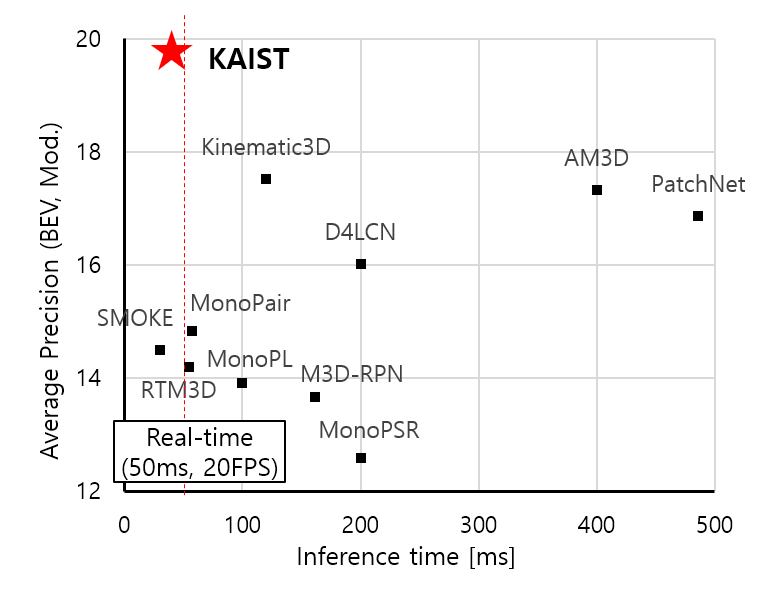

Professor Dongsuk Kum and his team have developed a super-fast and accurate 3D object detection method that uses a single monocular camera. Their method ranked 1st on the KITTI monocular 3D object detection leaderboard (the most widely adopted perception benchmark for autonomous driving) while running in real time, outperforming prior methods.

Article | Fall 2021

Detecting objects in a 3D driving environment is an essential but very challenging perception task for autonomous driving. The difficulty is greatest when only a single camera is used, because an image does not directly record the distance to each object. Recovering the lost 3D information from the image therefore plays a key role in monocular image-based 3D object detection.
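Why an image loses distance can be seen from the textbook pinhole camera model (a standard simplification, not something specific to this research; the intrinsics $f_x, f_y, c_x, c_y$ are generic symbols). A point $(X, Y, Z)$ in camera coordinates, with $Z$ the depth, lands on pixel $(u, v)$, and scaling the point by any $\lambda > 0$ lands on the same pixel:

```latex
\begin{aligned}
u &= f_x \frac{X}{Z} + c_x, \qquad v = f_y \frac{Y}{Z} + c_y \\
f_x \frac{\lambda X}{\lambda Z} + c_x &= f_x \frac{X}{Z} + c_x = u \quad \text{for every } \lambda > 0
\end{aligned}
```

Every point along the ray through a pixel therefore produces the same image location, so the depth $Z$ cannot be read directly from a single image and has to be inferred.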

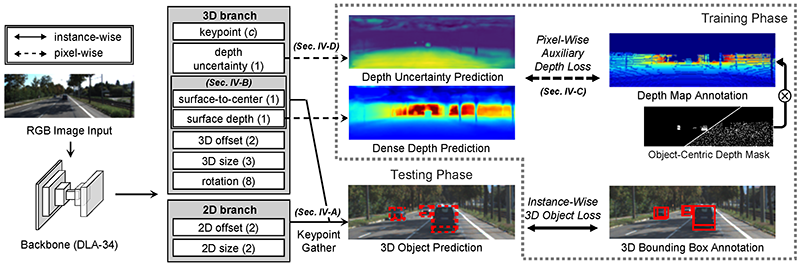

Professor Dongsuk Kum and his team have developed a super-fast and accurate 3D object detection method that uses a single monocular camera and does not require a large number of 3D bounding box annotations. To address this data shortage, the team's method uses raw LiDAR points, in addition to 3D bounding boxes, as training data for learning depth. This reduces the need to label instance-level 3D bounding boxes, which is a very expensive annotation task. The team also dramatically reduced computation time by coupling an image-based detector with an auxiliary depth prediction network that focuses on predicting the distance to the detected objects.
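The article does not describe implementation details. As a rough illustration only, the sketch below shows one simple way raw LiDAR points could supervise object-level depth: points assumed to be already in camera coordinates are projected with a pinhole model, each 2D detection box takes the median depth of the points that fall inside it, and an L1 loss compares predicted depth only for objects that received a target. The function names, the median rule and the `min_points` threshold are hypothetical choices for this example, not the authors' published method.

```python
from statistics import median


def project_points(points, fx, fy, cx, cy):
    """Project LiDAR points (assumed already transformed into the camera
    frame) onto the image plane with a pinhole model.

    Returns (u, v, depth) tuples; points with depth <= 0 lie behind the
    camera and are dropped.
    """
    projected = []
    for x, y, z in points:
        if z <= 0:
            continue
        projected.append((fx * x / z + cx, fy * y / z + cy, z))
    return projected


def object_depth_targets(projected, boxes, min_points=3):
    """Hypothetical object-centric depth target: the median depth of the
    projected LiDAR points inside each 2D box (u1, v1, u2, v2), or None
    when too few points fall inside to give a reliable estimate."""
    targets = []
    for u1, v1, u2, v2 in boxes:
        depths = [d for u, v, d in projected if u1 <= u <= u2 and v1 <= v <= v2]
        targets.append(median(depths) if len(depths) >= min_points else None)
    return targets


def masked_l1_depth_loss(predicted, targets):
    """Mean absolute depth error over objects that have a LiDAR target;
    objects without a target contribute no supervision."""
    pairs = [(p, t) for p, t in zip(predicted, targets) if t is not None]
    if not pairs:
        return 0.0
    return sum(abs(p - t) for p, t in pairs) / len(pairs)
```

For example, if LiDAR points at depths of 9.8 m, 10.0 m and 10.2 m land inside one box and none land inside a second, the targets are `[10.0, None]`; a prediction of 11.0 m for the first object gives a loss of 1.0, and the second object is simply ignored. The median is used here because it is less sensitive than the mean to background points that leak into a box.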

The proposed method ranked 1st on the KITTI monocular 3D object detection leaderboard, outperforming state-of-the-art methods by 12% while running three times faster (42 ms). Moreover, the method is not tied to a specific 3D detection network architecture and can be applied to many other networks. This research was published in IEEE Transactions on Intelligent Transportation Systems (T-ITS) under the title “Boosting Monocular 3D Object Detection with Object-Centric Auxiliary Depth Supervision.”
