KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Spring 2024 Vol. 22Consistent visual editing of complex visual modalities: videos and 3D scenes

Diffusion models have shown remarkable performance in editing images. However, extending those capabilities to complex visuals such as videos or 3D scenes remains challenging. This work presents a unified approach that enables the editing of such complex visuals by leveraging diffusion models, marking a significant advancement in the field.

In the field of AI, text-to-image diffusion models represent a significant innovation in the creation of creative digital content. These models have transformed the process of synthesizing or manipulating images following given natural language descriptions by leveraging AI's ability to understand language context and visualize complex concepts. While they have been highly successful in generating photorealistic images, their use has been limited to still images. Then, how can these generative capabilities be extended to more complex visual modalities, such as video or 3D scenes? The challenge here is to ensure consistency between the set of images specified for each visual modality, e.g., video editing requires that the output remain temporally consistent, and 3D scene editing requires that the output remain multi-view consistent, but image-only diffusion models lack such an understanding.

To address this challenge of ensuring consistency in complex visual editing, the research paper "Collaborative Score Distillation for Consistent Visual Editing" by Subin Kim, Kyungmin Lee, and their colleagues from KAIST and Google Research presents a novel solution. The method, known as Collaborative Score Distillation (CSD), extends the capabilities of text-to-image diffusion models to more than just static images, without using modality-specific datasets. This innovation is achieved by considering a set of images as particles within the Stein Variational Gradient Descent (SVGD) framework. This strategic approach allows for the synchronized distillation of generative priors across a set of images, ensuring that edits made in one image are reflected coherently and seamlessly in all others. In essence, CSD acts as a conductor, orchestrating a harmonious symphony of edits that resonate consistently across the spectrum of visual modalities.

The practical applications and results of using CSD are both noteworthy and enlightening due to its flexibility and effectiveness in various complex editing tasks. This versatility ranges from editing panoramic images and videos to manipulating 3D scenes, where CSD enables users to edit a variety of complex visual modalities following a given language instruction in a zero-shot manner. The impact of this research is further recognized by its publication at NeurIPS 2023, one of the most prestigious academic conferences in the field of artificial intelligence.

For more information, please visit: https://subin-kim-cv.github.io/CSD/

Most Popular

An intravenous needle that irreversibly softens upon insertion by body temperature

Read more

Consistent visual editing of complex visual modalities: videos and 3D scenes

Read more

SUPPORT enables accurate optical readout of voltage signals in neurons

Read more

AI for detecting aimbots in FPS games

Read more

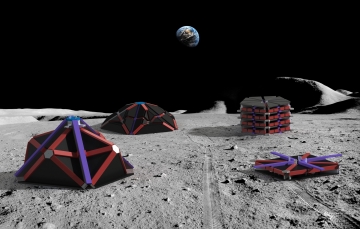

Origami-based deployable space shelter

Read more