KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Fall 2024 Vol. 23

Multi-hand posture rehabilitation for stroke survivors: Rehabilitation system using vision-based intention recognition and a soft robotic glove

To assist stroke survivors in recovering hand functions, a hand rehabilitation system consisting of a vision-based hand posture intention detection algorithm and a soft robotic glove is proposed.

Individuals suffering from stroke experience a rapid loss of brain function due to poor blood flow to the brain. This can result in diminished motor skills, coordination, and precision in various limbs. In particular, impaired hand functions often render stroke survivors incapable of handling objects and significantly reduce their ability to perform activities of daily living (ADLs). This inability to independently perform ADLs can greatly reduce the quality of life.

For stroke survivors, practicing various hand postures in accordance with their intentions is a crucial aspect of recovering hand functions. However, existing intention detection methods based on the analysis of biosignals, such as electromyography (EMG) and electroencephalography (EEG), have struggled to accurately detect intentions for multiple hand postures.

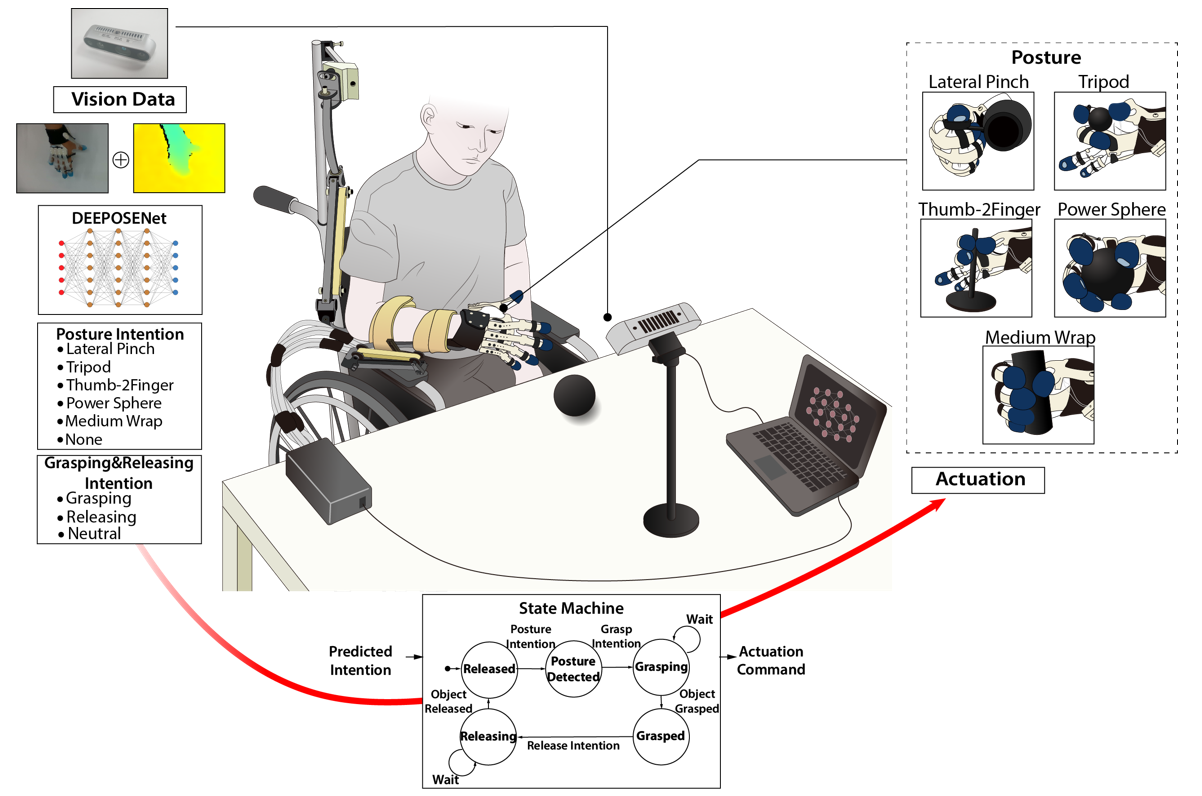

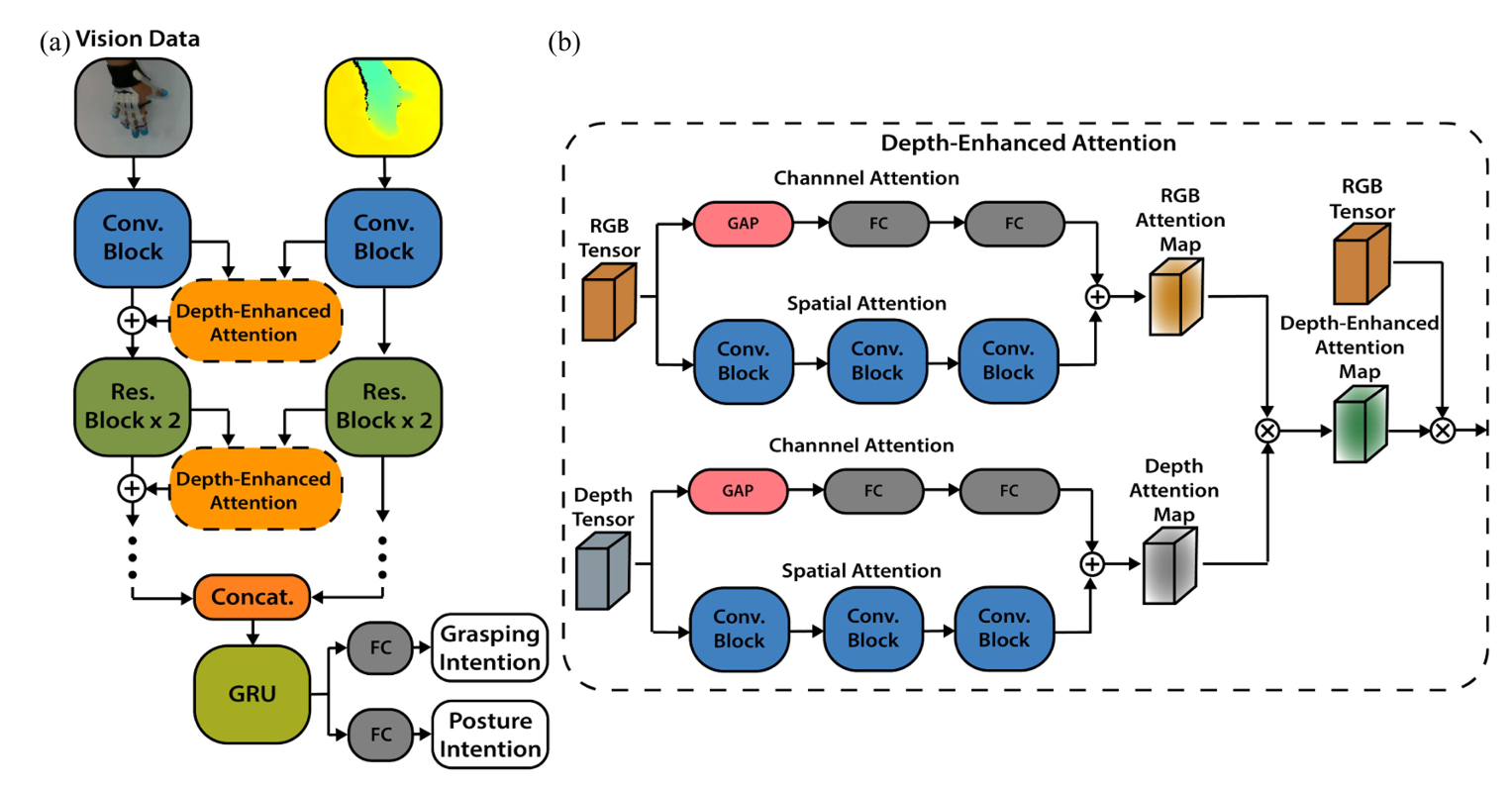

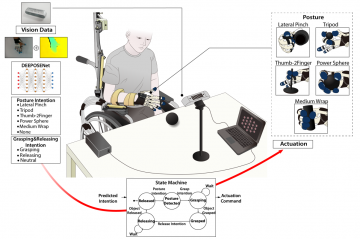

To overcome these challenges, a research team led by Professor Sungho Jo from the School of Computing and Professor Hyung-Soon Park from the Department of Mechanical Engineering proposed a hand rehabilitation system that combines a vision-based hand intention detection algorithm with a soft wearable robotic glove. The vision-based hand intention detection model, DEpth Enhanced hand POSturE intention Network (DEEPOSE-Net), analyzes the user’s hand motions and hand-object interactions from color (RGB) and depth images to predict intentions for various hand postures. The soft wearable robot assists finger movements based on these predicted intentions.

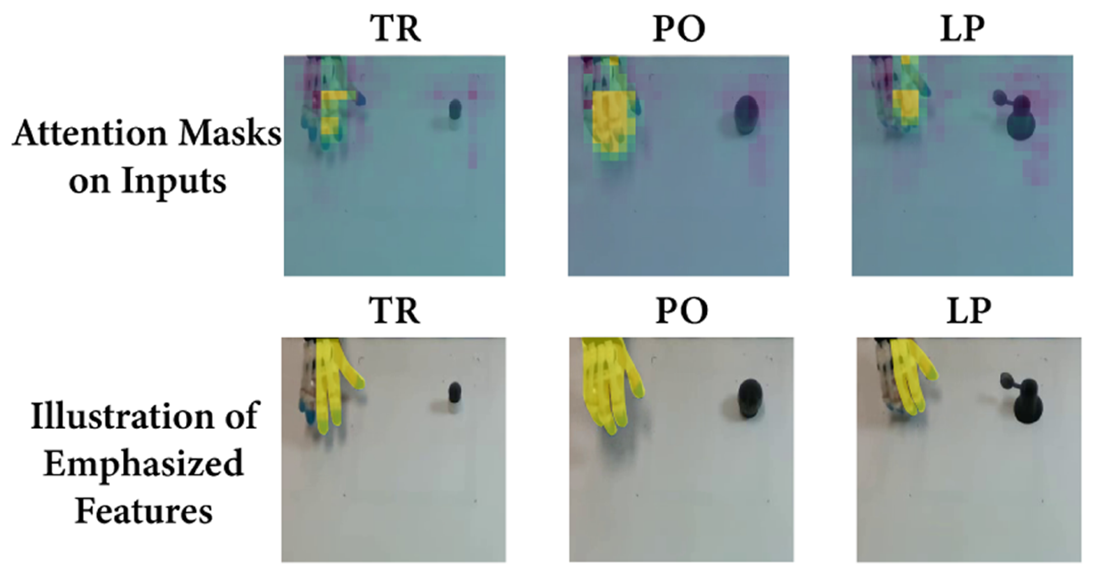

DEEPOSE-Net employs a depth-enhanced attention mechanism, which emphasizes the characteristic parts of each hand posture, to classify intentions involving multiple hand postures accurately. This attention mechanism learns the correlations between depth data and RGB data, identifying and emphasizing pertinent areas in the RGB data for predicting hand posture intentions. In particular, this mechanism emphasizes the features that distinguish visually similar postures, leading to increased accuracy in differentiating the corresponding intentions. For example, the tripod posture used for grasping small objects from above and the power sphere posture for grasping large objects are visually similar when observed from the camera perspective. The depth-enhanced attention mechanism accurately emphasizes only the fingers used in each hand posture, allowing it to distinguish these movements more effectively.
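The idea of using depth data to decide which parts of the RGB data to emphasize can be illustrated with a minimal sketch. This is not the DEEPOSE-Net architecture, whose layers and parameters are not described here. It assumes that RGB and depth images have already been turned into feature maps of the same shape, scores each location by a simple dot product between the two, and uses a random linear classifier over five postures. All function names, shapes, and weights below are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def depth_guided_attention(rgb_feat, depth_feat):
    """Weight RGB features by their correlation with depth features.

    Both inputs are (H, W, C) feature maps (an assumption of this sketch).
    Returns the emphasized RGB features and the (H, W) attention map.
    """
    h, w, c = rgb_feat.shape
    # Per-location correlation score between RGB and depth features.
    scores = (rgb_feat * depth_feat).sum(axis=-1) / np.sqrt(c)
    # Normalize the scores over all locations so they sum to 1.
    attn = softmax(scores.reshape(-1)).reshape(h, w)
    # Emphasize RGB features where depth and RGB agree most strongly.
    emphasized = rgb_feat * attn[..., None]
    return emphasized, attn


def classify(emphasized, weights):
    """Pool the emphasized features and score each posture intention."""
    pooled = emphasized.sum(axis=(0, 1))  # shape (C,)
    return softmax(pooled @ weights)      # shape (num_postures,)


# Toy inputs: random stand-ins for learned features and weights.
rng = np.random.default_rng(0)
rgb = rng.normal(size=(4, 4, 8))
depth = rng.normal(size=(4, 4, 8))
posture_weights = rng.normal(size=(8, 5))  # five postures, as in the study

emphasized, attn = depth_guided_attention(rgb, depth)
probs = classify(emphasized, posture_weights)
print("attention map shape:", attn.shape)
print("predicted posture index:", int(probs.argmax()))
```

In a trained model, the feature extractors and classifier weights would be learned from data, so that locations such as the fingers involved in a given posture receive the largest attention weights.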

DEEPOSE-Net demonstrated higher intention recognition accuracy than existing vision-based intention recognition models and biosignal-based intention recognition methods. For five different hand postures, the model trained on motions from healthy individuals achieved 80.3% intention detection accuracy when applied to stroke survivors and 90.4% accuracy for healthy individuals. Further training of the model on motions from stroke survivors may improve its accuracy in detecting the hand posture intentions of these users. This research has been recognized for its achievements in interdisciplinary studies in computer science and was published in the April issue of IEEE Transactions on Industrial Informatics. (Title: Multiple Hand Posture Rehabilitation System using Vision-Based Intention Detection and Soft Robotic Glove)

Most Popular

High-performance and sustainable paper coating material that prevents microplastics

Read more

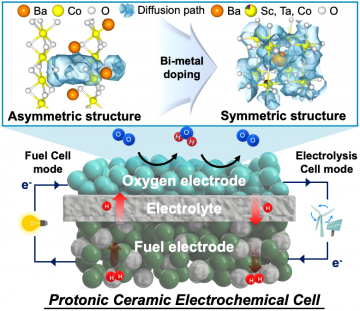

Next-generation ceramic electrochemical cells for global net-zero goals

Read more

Multi-hand posture rehabilitation for stroke survivors: Rehabilitation system using vision-based intention recognition and a soft robotic glove

Read more

Impacts of new town developments on carbon sinks in the Seoul metropolitan area

Read more

Revolutionizing strength: Hercules artificial muscles 17 times stronger than human muscles

Read more