KAIST

BREAKTHROUGHS

Research Webzine of the KAIST College of Engineering since 2014

Spring 2025 Vol. 24Development of a deep-learning processor for artificial intelligence

Development of a deep-learning processor for artificial intelligence

An energy-efficient scalable instruction-set processor is developed for artificial intelligence (AI) systems. The AI processor is designed based on instruction-set architecture specialized for deep-learning models, thereby implementing various kinds of recognition algorithms efficiently without hardware modifications.

Article | Spring 2018

From advanced driver-assistance systems to language translators, artificial intelligence (AI) systems have been introduced in a diverse range of devices in our daily lives by virtue of their unprecedented recognition rates. As of now, it is impractical to embed deep-learning algorithms targeting for real-time applications into energy-limited devices. First, as the computational complexity of AI models increases, processing these models consumes a huge amount of energy and time. Application-specific integrated circuits have been employed in academia to cope with such complicated algorithms, but they are only applicable to predefined AI models due to low configurability.

Moreover, the aforementioned structures are single-chip solutions, so that the development of a new chip is inevitable whenever a more sophisticated algorithm is developed, requiring an enormous amount of manufacturing cost and time.

Recently, a research team led by Professor In-Cheol Park presented a novel architecture in order to overcome those limitations and open up a new vista for AI processors. A new processor called a deep-learning specific instruction-set processor (DSIP) is designed to achieve high energy efficiency and scalability. The DSIP allows programming of a variety of AI models, such as convolutional neural networks (CNNs), recurrent neural networks, and long short-term memory networks, without hardware modifications.

In particular, the DSIP is efficient in realizing deep CNNs, which have been indispensable in the field of object recognition since 2012. In conventional AI processors, the on-chip memory accesses that occur during convolution account for over 50% of the total energy consumption. A new convolution method proposed for the DSIP, which is called incremental convolution, reduces the number of on-chip memory accesses significantly by computing the outputs incrementally, enhancing energy efficiency in turn.

Apart from energy consumption, the long recognition time is also a major hindrance for practical implementation. Previous research has focused on parallelizing neural network operations with more processing elements. However, their performance gains reached the limit due to the incredible amount of computations and parameters used in recent deep-learning models. Thus, the DSIP is built upon a master-servant instruction-set architecture taking into account these circumstances: the master is responsible for transmitting the parameters from an off-chip memory to the DSIP, and the servant performs neural network operations on the data received from the master by using multiple DSIP arithmetic logic units (DALUs). Due to the division of labor between the master core and the servant core, the DSIP achieves additional speedup by overlapping off-chip data transmissions and computational operations.

The current AI processors are inadequate to meeting the real-time requirements of complex deep-learning models. The processors need to be redesigned to accommodate more complex deep-learning models in real time. To mitigate the manufacturing cost, the researchers took a different approach to maximize the throughput, using multiple DSIPs to build a system. As a deep-learning model consists of several small neural network models, the DSIP supports scalability by providing relevant instructions in the master core, and the instructions make it possible to connect multiple DSIPs in a form of 1D or 2D chain structure. Since multiple DSIPs process small models in parallel, the whole system can be regarded as a distributed computing system.

A prototype chip built in a 65 nm CMOS process achieves high energy efficiency up to 125.4 GMACS/W for the AlexNet model, which is 2.2 times higher than that of [1]. This demonstrates that a practical recognition processor can be designed based on the DSIP, and it is expected to become a key element of AI systems in the area of autonomous vehicles and cloud computing.

[1] Y. –H. Chen et al., “Eyeriss: An energy-efficient reconfigurable accelerator for deep convolutional neural networks,” in IEEE ISSCC, 2016, pp. 262–264.

[2] G. Desoli et al., “A 2.9TOPS/W deep convolutional neural network SoC in FD-SOI 28nm for intelligent embedded systems,” in IEEE ISSCC, 2017, pp. 238–240.

Original paper: http://ieeexplore.ieee.org/document/8103908/

Most Popular

When and why do graph neural networks become powerful?

Read more

Smart Warnings: LLM-enabled personalized driver assistance

Read more

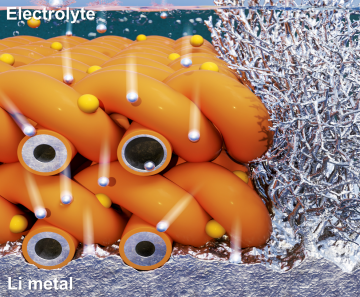

Extending the lifespan of next-generation lithium metal batteries with water

Read more

Professor Ki-Uk Kyung’s research team develops soft shape-morphing actuator capable of rapid 3D transformations

Read more

Oxynizer: Non-electric oxygen generator for developing countries

Read more